
This document details the architecture of the clusters deployed by Kublr in Azure.

One Azure location in a Kublr cluster spec always corresponds to a resource group stack created by Kublr in the Azure subscription and Azure region specified via the spec.locations[*].azure.azureApiAccessSecretRef and spec.locations[*].azure.region properties of the location spec.

Kublr cluster Azure deployment customizations are defined in the Kublr cluster specification sections spec.locations[*].azure, spec.master.locations[*].azure and spec.nodes[*].locations[*].azure.

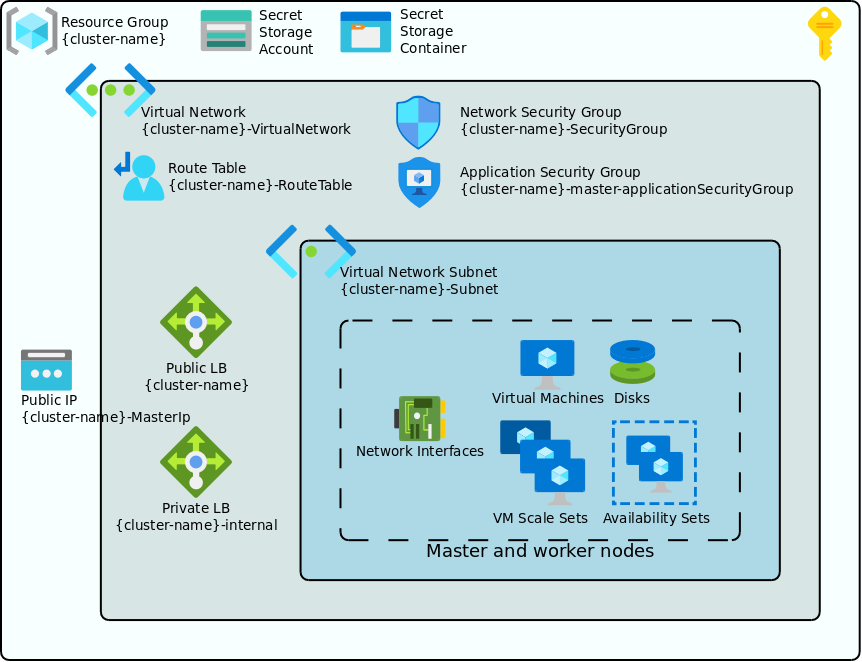

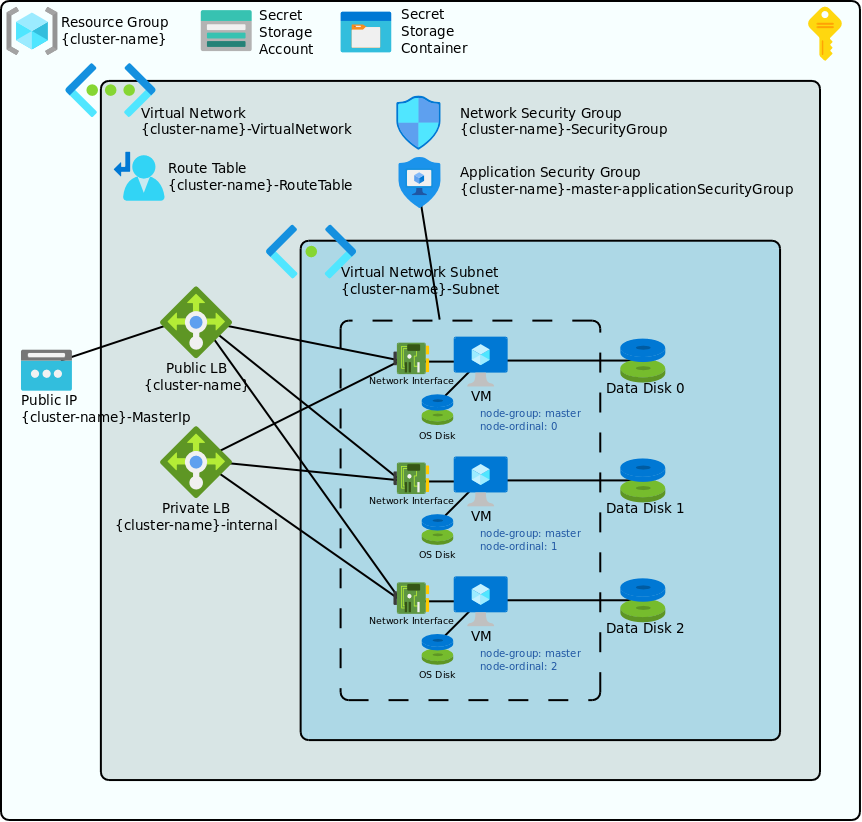

The following diagram shows a typical Azure deployment configuration for a Kublr cluster.

Kublr uses deployments and ARM templates to provision the infrastructure.

The following main components comprise a typical Kublr Azure Kubernetes cluster:

Resource group and subscription

By default Kublr creates a cluster within a single resource group in a single region and under one subscription. It is possible to set up multi-region multi-subscription and multi-resource-group deployments via advanced custom configuration.

The resource group name is by default the same as the cluster name.

Storage account and storage container for the cluster secrets exchange

Kublr configures cluster master and worker nodes to run the Kublr agent - a service responsible for Kubernetes initialization, configuration, and maintenance. Kublr agents running on different nodes exchange information via a so-called “secret store”, which for Azure deployments is implemented as an Azure storage container.

Virtual network, subnet, and route table

A virtual network is set up with one subnet and a default routing table. It is possible to customize it via Kublr cluster specification to any necessary degree - including additional subnets, specific routing rules, additional security rules, VPN peerings etc.

Network and application security groups

A network security group is set up to secure the virtual network.

Public and private load balancers and a public master IP

Kublr sets up a public and a private load balancer with a public master IP by default. This can be customized, e.g. to disable the public load balancer when a completely private cluster is required.

Other customization options include using a different SKU for load balancers (‘standard’ SKU is used by default) and many others.

Master and worker node groups objects

These objects include all or some of the following objects depending on a corresponding node group type and parameters: virtual machines, managed disks, network interfaces, availability sets, and virtual machine scale sets.

Specific objects created for different group types and node group parameters are described below.

Kublr cluster is fully defined by a cluster specification - a structured document usually in YAML format.

As a cluster can potentially use several infrastructure providers, different providers used by the cluster are abstracted as “locations” in the cluster specification. Each location includes provider-specific parameters.

An Azure location can look as follows in the cluster specification:

    spec:
      ...
      locations:
        - name: az # the location name used to reference it
          azure: # Azure-specific location parameters
            azureApiAccessSecretRef: azure-secret # reference to Azure API creds
            region: eastus
            enableMasterSSH: true
            ...
    ---
    kind: Secret
    metadata:
      name: azure-secret
    spec:
      azureApiAccessKey:
        tenantId: '***'
        subscriptionId: '***'
        aadClientId: '***'
        aadClientSecret: '***'

Normally an Azure location in a Kublr cluster specification corresponds to one Azure resource group under a certain subscription, that includes all Azure resources required by the cluster, such as virtual machines, load balancers, virtual network, subnet, etc.

Detailed description of all parameters available in a Kublr cluster specification for an Azure location is available at API Types Reference > AzureLocationSpec.

The main part of a Kubernetes cluster is a compute node that runs containerized applications.

Kublr abstracts Kubernetes nodes as cluster instance groups. There are two main categories of the instance groups in a Kublr Kubernetes cluster: master groups and worker groups.

The master group is a dedicated node group used to run the Kubernetes API server components and the etcd cluster. The master group is described in the spec.master section of the cluster specification.

The worker groups are node groups used to run application containers. The worker groups are described in the spec.nodes array.

Besides the fact that they are located in different sections of the cluster specification, master and worker group specifications have exactly the same structure documented in the API Types Reference > InstanceGroupSpec.

While generally speaking an instance group is just a set of virtual or physical machines and thus has a lot of common characteristics on different infrastructure providers (such as AWS, Azure, vSphere, GCP etc), certain provider-specific parameters are necessary to customize a group implementation. These parameters are defined in the locations[*].<provider> subsection of the group specification. For example, the following is a cluster specification snippet showing a worker group specification with generic and Azure-specific parameters:

    spec:
      ... # cluster spec parameters not related to the example are omitted
      locations: # cluster may potentially span several locations
        - name: azure
          azure:
            ... # Azure-specific parameters for the location
      nodes: # an array of worker node groups
        - name: example-group1 # worker node group name
          stateful: true # groups may be stateful or stateless
          autoscaling: false # whether autoscaling is enabled for this group
          locations:
            - locationRef: azure
              azure:
                groupType: VirtualMachineScaleSet # the group type defining the group implementation
                zones: ["1", "2"] # Azure-specific parameters
                pinToZone: pin # Azure-specific parameters
                ...

Detailed description of all parameters available in a Kublr cluster specification for Azure instance groups is available at API Types Reference > AzureInstanceGroupLocationSpec.

NOTE Besides specifying group type via specification, you can also do that via the Kublr UI as described here.

Types of Azure node groups:

| Group Type | Stateful/Stateless | Master/Worker | Description |

|---|---|---|---|

| VirtualMachine | Stateful | Master or Worker | A set of standalone virtual machines |

| AvailabilitySet | Stateful | Master or Worker | A set of standalone virtual machines in an Availability Set |

| VirtualMachineScaleSet | Stateful | Master or Worker | A set of VMSS’ (Virtual Machine Scale Sets) each of size 1 |

| VirtualMachineScaleSet | Stateless | Worker only | One VMSS of size defined by the node group size |

| AvailabilitySetLegacy | Stateful | Master or Worker | A set of standalone virtual machines in one cluster-wide Availability Set; the model used by Kublr pre-1.20 |

As shown in the table the only group type that supports stateless groups is VirtualMachineScaleSet. All other group types MUST be stateful.

One cluster can contain multiple groups of different types, although some restrictions are in place when using ‘Basic’ SKU load balancers, see Load Balancers section for more details.

The group type may be omitted in the initial cluster specification submission, in which case Kublr will automatically assign the group type as follows:

| Description | Default group type |

|---|---|

| Master and stateful worker groups with zones | VirtualMachine |

| Master and stateful worker groups without zones | AvailabilitySet |

| Stateless groups | VirtualMachineScaleSet |
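
To illustrate the defaults in the table above, here is a minimal sketch of a specification that omits groupType everywhere. The comments note the type Kublr would be expected to assign according to the table. The location name `az` and the worker group names are hypothetical, and other group parameters are left out for brevity.

```yaml
spec:
  locations:
    - name: az
      azure:
        region: eastus
  master:
    locations:
      - locationRef: az
        azure:
          zones: ["1", "2", "3"]   # zones set, groupType omitted -> VirtualMachine
  nodes:
    - name: stateful-workers       # hypothetical group name
      stateful: true
      locations:
        - locationRef: az
          azure: {}                # no zones, groupType omitted -> AvailabilitySet
    - name: stateless-workers      # hypothetical group name
      stateful: false
      locations:
        - locationRef: az
          azure: {}                # stateless, groupType omitted -> VirtualMachineScaleSet
```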

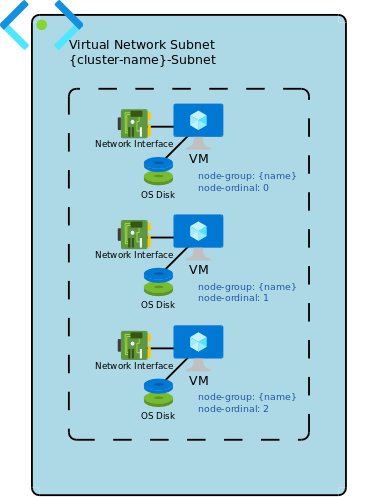

VirtualMachine group type is implemented as a set of regular Azure virtual machines. Each machine is tagged with the group name and the unique machine ID within the group (from 0 to max-1).

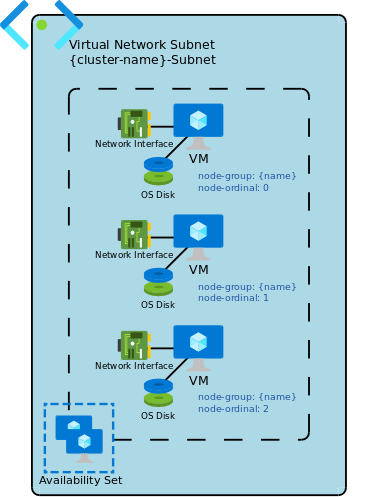

AvailabilitySet group type is implemented as a set of regular Azure virtual machines in a single availability set. Each machine is tagged with the group name and the machine ID within the group (from 0 to max-1).

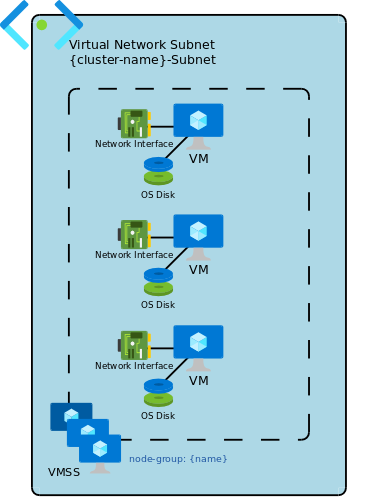

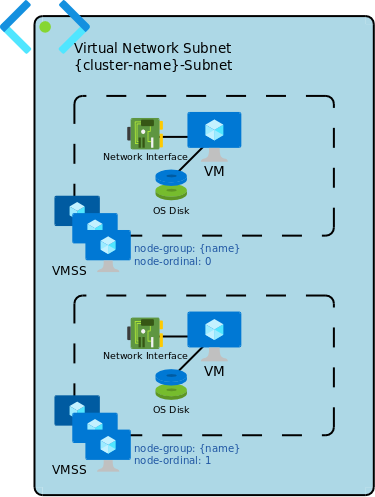

Stateless VirtualMachineScaleSet group type is implemented as a single VMSS of the size between min and max group size, and containing all VMs of the group. The VMSS is tagged with the group name.

Stateful VirtualMachineScaleSet group type is implemented as a set of VMSS each of size 1. Each VMSS is tagged with the group name and the unique machine ID within the group (from 0 to max-1).
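
The difference between the two VMSS flavors comes down to the stateful flag of the group. The following sketch declares one group of each kind. The location name `az` and the group names are hypothetical, and group size parameters are omitted because their field names are not covered in this document.

```yaml
spec:
  nodes:
    - name: vmss-stateless                    # hypothetical group name
      stateful: false                         # one VMSS containing all VMs of the group
      locations:
        - locationRef: az
          azure:
            groupType: VirtualMachineScaleSet
    - name: vmss-stateful                     # hypothetical group name
      stateful: true                          # a set of VMSS, each of size 1
      locations:
        - locationRef: az
          azure:
            groupType: VirtualMachineScaleSet
```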

While in many aspects master groups are exactly the same as worker groups, there are several important differences:

master group name is always ‘master’ - Kublr will enforce this constraint

master group is always stateful

master group size can only be 1, 3, or 5

master group cannot be auto-scaled

persistent data disks are created by Kublr for each master node

Every node, master or worker, has an ephemeral OS root disk that exists only for the lifetime of the node. Master nodes, in addition to OS disks, have fully persistent managed disks used to store etcd and other cluster system data. This makes it possible to delete and restore master nodes / VMs without losing cluster system data.

master VMs may have a public IP and a load balancing rule on the public load balancer to enable public Kubernetes API endpoint (optional)

master VMs may have a load balancing rule on the private load balancer to enable private Kubernetes API endpoint

master VMs may have public NAT rules on the public load balancer to enable SSH access (disabled by default)

All group types are supported for masters (except for stateless VMSS), as well as zones and zone pinning (see Zone Support section for more details).
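
The constraints above can be summarized in a master group sketch such as the following. It uses only fields shown elsewhere in this document; the location name `az` is hypothetical, and the node count is omitted because the corresponding field name is not covered here.

```yaml
spec:
  master:
    name: master                     # always 'master'; Kublr enforces this
    stateful: true                   # master groups are always stateful
    autoscaling: false               # master groups cannot be auto-scaled
    locations:
      - locationRef: az              # hypothetical location name
        azure:
          groupType: AvailabilitySet # any group type except stateless VMSS
```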


Starting with the 1.29.0 release, Kublr fully supports Azure Managed Identity for cluster nodes and uses it by default.

There are two ways Kublr can configure Kubernetes components to authenticate with the Azure cloud API: Managed Identity and Service Account.

The Managed Identity approach is considered more secure, as no credentials are passed or stored in clear-text files anywhere, and the credentials’ secret tokens are automatically and regularly rotated by Azure under the hood.

The Service Account approach, in contrast, requires that static credentials (tenant ID, subscription ID, client ID, and, most critically, client secret) are stored in a file on each virtual machine, and these credentials cannot be rotated easily or frequently.

The only downside of the Managed Identity approach is that certain Azure resources required for it to work cannot be created without additional permissions. As a result, while clusters with the Service Account authentication type can be created by Kublr using just the Contributor role, creating clusters with Managed Identity authentication requires that the User Access Administrator (or Owner) role is also assigned to Kublr’s service account.

By default, Kublr 1.29.0 and newer will create clusters with Managed Identity authentication type, with only exceptions being:

Clusters configured with Service Account authentication type can be updated to use Managed Identity at a later time, when additional permissions are granted to the Kublr service account.

It is also possible to create clusters with Managed Identity without giving the Kublr service account additional permissions, but that requires that Azure Authorization resources are created and configured manually before the cluster is created, and that the cluster is configured to use them. It is generally a more complicated and advanced scenario and is therefore not recommended.

The following attributes control authentication type in Kublr Kubernetes clusters:

    spec:
      locations:
        - azure:
            identityType: ManagedIdentity # ManagedIdentity (default) or ServiceAccount
            masterManagedIdentity:
              roleAssignmentGUID: ''
              secretStoreRoleAssignmentGUID: ''
              skip: false
              skipRoleAssignment: false
              skipSecretStoreRoleAssignment: false
            workerManagedIdentity:
              roleAssignmentGUID: ''
              secretStoreRoleAssignmentGUID: ''
              skip: false
              skipRoleAssignment: false
              skipSecretStoreRoleAssignment: false
            armTemplateExtras:
              masterUserAssignedIdentity: {}
              masterRoleAssignment: {}
              masterSecretStoreRoleAssignment: {}
              workerUserAssignedIdentity: {}
              workerRoleAssignment: {}
              workerSecretStoreRoleAssignment: {}
      master:
        locations:
          - azure:
              identityType: '' # unset (default), ManagedIdentity, or ServiceAccount
      nodes:
        - name: group1
          locations:
            - azure:
                identityType: '' # unset (default), ManagedIdentity, or ServiceAccount

Normally most of these attributes stay unset and the only difference you would see in Azure cluster specifications in Kublr 1.29.0 is the Azure location’s attribute identityType. This attribute will always be set on a cluster managed by Kublr 1.29+. The only way it may be unset is in a cluster that was created by a previous version of Kublr after which Kublr was upgraded to 1.29+.

    spec:
      locations:
        - azure:
            identityType: ManagedIdentity
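
Because identityType can also be set per node group, a location-wide default can be combined with a group-level override. The sketch below assumes a location named `az` (a hypothetical name) and shows one worker group switched to ServiceAccount, while the master group inherits the location default.

```yaml
spec:
  locations:
    - name: az
      azure:
        identityType: ManagedIdentity      # location-wide default
  master:
    locations:
      - locationRef: az
        azure: {}                          # identityType unset: the location default applies
  nodes:
    - name: group1
      locations:
        - locationRef: az
          azure:
            identityType: ServiceAccount   # overrides the location default for this group only
```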

Azure Location properties:

identityType - default identity type used for node groups where the identityType property is not set; one of ServiceAccount or ManagedIdentity

masterManagedIdentity.roleAssignmentGUID - GUID name of the master RoleAssignment resource. If set, this GUID is used for the Kublr-generated role assignment resource for masters. If not set, Kublr generates a unique predictable GUID based on the cluster name and subscription ID.

masterManagedIdentity.secretStoreRoleAssignmentGUID - GUID name of the master secret store storage account RoleAssignment resource. If set, this GUID is used for the Kublr-generated secret store role assignment resource for masters. If not set, Kublr generates a unique predictable GUID based on the cluster name and subscription ID.

masterManagedIdentity.skip - if set to true, then no role assignment or user assigned identity resources are created for masters

masterManagedIdentity.skipRoleAssignment - if set to true, then no role assignment resource is created for masters

masterManagedIdentity.skipSecretStoreRoleAssignment - if set to true, then no secret store role assignment resource is created for masters

workerManagedIdentity - same sub-attributes as for masterManagedIdentity, but for worker nodes in this location

armTemplateExtras.masterUserAssignedIdentity - overrides for the master UserAssignedIdentity resource if created

armTemplateExtras.masterRoleAssignment - overrides for the master RoleAssignment if created

armTemplateExtras.masterSecretStoreRoleAssignment - overrides for the master secret store storage account RoleAssignment if created

armTemplateExtras.worker* - same attributes as for master but for worker nodes in this location

Instance Group Azure Location new properties:

identityType - one of ServiceAccount, ManagedIdentity, or unset (default)

Instance Group Azure Location existing properties that affect the new behavior:

armTemplateExtras.[scaleSet|virtualMachine].identity.type - can be used to override the Kublr-generated identity type

armTemplateExtras.[scaleSet|virtualMachine].identity.userAssignedIdentities.* - can be used to add more user-assigned identities to the instances of the corresponding groups

Kublr 1.20 introduced a number of improvements in the Azure cluster infrastructure architecture that were not directly compatible with the previously used architecture. To maintain compatibility and enable smooth migration from Kublr 1.19 to Kublr 1.20, the pre-1.20 architecture is supported through a specific combination of parameters in the cluster specification. Migration of existing pre-1.20 cluster specifications to the Kublr 1.20 format is performed automatically on the first cluster update in Kublr 1.20.

The following combination of parameters in Kublr 1.20 provides compatibility with the pre-1.20 Azure architecture:

    spec:
      locations:
        - azure:
            loadBalancerSKU: Basic
            enableMasterSSH: true
      kublrAgentConfig:
        kublr_cloud_provider:
          azure:
            vm_type: ''
      master:
        locations:
          - azure:
              groupType: AvailabilitySetLegacy
              masterLBSeparate: true
      nodes:
        - locations:
            - azure:
                groupType: AvailabilitySetLegacy

A pre-1.20 Kublr Azure Kubernetes cluster can be imported into a KCP 1.20+ or can be used after KCP upgrade to 1.20+ as follows:

It is still recommended to migrate a pre-1.20 Kublr Azure Kubernetes cluster to a new Azure architecture as soon as convenient. Use the procedure described on the support portal Migrate Pre-Kublr-1.20 Azure Cluster Architecture to 1.20 Architecture for migration.

Kublr creates Azure infrastructure by translating the Kublr cluster specification into an Azure ARM template using a number of built-in templates and rules.

The resources generated for the ARM template can be extensively customized via the cluster specification.

This section describes the customization principles.

When creating an Azure Kublr Kubernetes cluster, Kublr creates a resource group for the cluster, and then applies the generated ARM template to that resource group using a Deployment object. You can access that deployment object and review the ARM template.

The ARM template includes a number of resources as described in the Deployment Layout section.

Some of these resources are created per-cluster (e.g. private and public load balancer, NAT gateway, virtual network, storage account etc), others are created per node group (e.g. virtual machines, VMSS etc).

Properties of each Azure resource in the ARM template are by default generated based on the cluster specification; but it is also possible to override (almost) any property of those resources, or add any properties that are not generated.

Moreover, such overrides are required for many advanced use cases (e.g. using spot instances).

Properties of the resources generated per cluster can be customized via the spec.locations[*].azure.armTemplateExtras section of the cluster specification.

Properties of the resources generated per node group can be customized via the spec.master.locations[*].azure.armTemplateExtras and spec.nodes[*].locations[*].azure.armTemplateExtras sections of the cluster specification for the corresponding node group, master or worker.

For example, by default the following ARM template resource will be generated for a cluster virtual network:

    - type: Microsoft.Network/virtualNetworks
      apiVersion: 2020-08-01
      name: "[variables('k8sVirtualNetwork')]"
      location: "[parameters('region')]"
      dependsOn:
        - "[resourceId('Microsoft.Network/networkSecurityGroups', variables('k8sSecurityGroup'))]"
        - "[resourceId('Microsoft.Network/routeTables', variables('k8sRouteTable'))]"
        - "[resourceId('Microsoft.Network/natGateways', variables('k8sNatGateway'))]"
      tags:
        KublrIoCluster-my-cluster: owned
      properties:
        addressSpace:
          addressPrefixes:
            - 172.16.0.0/16
        subnets:
          - name: "[variables('k8sVirtualNetworkSubnet')]"
            properties:
              addressPrefix: 172.16.0.0/16
              natGateway:
                id: "[resourceId('Microsoft.Network/natGateways', variables('k8sNatGateway'))]"
              networkSecurityGroup:
                id: "[resourceId('Microsoft.Network/networkSecurityGroups', variables('k8sSecurityGroup'))]"
              routeTable:
                id: "[resourceId('Microsoft.Network/routeTables', variables('k8sRouteTable'))]"

You can customize this definition by providing additional and override properties via the spec.locations[*].azure.armTemplateExtras.virtualNetwork and spec.locations[*].azure.armTemplateExtras.subnet sections in the Kublr cluster specification.

For example, the following snippet in the cluster specification adds an address prefix and a subnet to the virtual network and overrides the address prefix of the default subnet.

    spec:
      locations:
        - name: default
          azure:
            virtualNetworkSubnetCidrBlock: 172.20.0.0/16
            armTemplateExtras:
              # everything specified in this section will be directly merged into
              # the virtual network resource definition created by Kublr
              virtualNetwork:
                properties:
                  addressSpace:
                    addressPrefixes:
                      # this address prefix will be used in addition to 172.20.0.0/16
                      # defined above in virtualNetworkSubnetCidrBlock property
                      - 172.21.0.0/16
                  subnets:
                    # a custom subnet will be included in addition to the standard subnet
                    # created by Kublr
                    - name: "my-custom-subnet"
                      properties:
                        addressPrefix: 172.21.3.0/24
                        # this example custom subnet uses the security group and
                        # the route table created by Kublr
                        # but it can also use other security group and/or route table
                        networkSecurityGroup:
                          id: "[resourceId('Microsoft.Network/networkSecurityGroups', variables('k8sSecurityGroup'))]"
                        routeTable:
                          id: "[resourceId('Microsoft.Network/routeTables', variables('k8sRouteTable'))]"
              # everything specified in this section will be directly merged into
              # the default subnet resource definition created by Kublr within the
              # virtual network resource
              subnet:
                properties:
                  addressPrefix: 172.20.32.0/20

Note that the overriding properties structure must be that of a corresponding Microsoft Azure ARM resource as documented in Microsoft Azure API and ARM documentation:

The following table includes all per-cluster ARM template resources customizable using this method:

| Kublr Azure Resource | Kublr cluster spec property under spec.locations[*].azure.armTemplateExtras | Since Kublr Version |

|---|---|---|

| Secret store storage account | storageAccount | 1.20 |

| Secret store storage account blob service | blobService | 1.20 |

| Secret store storage account blob service container | container | 1.20 |

| Virtual network | virtualNetwork | 1.20 |

| Default subnet | subnet | 1.20 |

| Default route table | routeTable | 1.20 |

| Default network security group | securityGroup | 1.20 |

| Security rule for master SSH access | securityRuleMastersAllowSSH | 1.20 |

| Security rule for master API access | securityRuleMastersAllowAPI | 1.20 |

| Default NAT gateway | natGateway | 1.20 |

| Default NAT gateway public IP | natGatewayPublicIP | 1.20 |

| Public load balancer | loadBalancerPublic | 1.20 |

| Public load balancer public IP | loadBalancerPublicIP | 1.20 |

| Public load balancer master API frontend IP config | loadBalancerPublicFrontendIPConfig | 1.20 |

| Public load balancer master API backend address pool | loadBalancerPublicBackendAddressPool | 1.20 |

| Public load balancer master API rule | loadBalancerPublicRule | 1.20 |

| Public load balancer master API probe | loadBalancerPublicProbe | 1.20 |

| Private load balancer | loadBalancerPrivate | 1.20 |

| Private load balancer master API frontend IP config | loadBalancerPrivateFrontendIPConfig | 1.20 |

| Private load balancer master API backend address pool | loadBalancerPrivateBackendAddressPool | 1.20 |

| Private load balancer master API rule | loadBalancerPrivateRule | 1.20 |

| Private load balancer master API probe | loadBalancerPrivateProbe | 1.20 |

| (deprecated) Legacy (pre-1.20) master availability set | availabilitySetMasterLegacy | 1.20 |

| (deprecated) Legacy (pre-1.20) worker availability set | availabilitySetAgentLegacy | 1.20 |
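
Any property in the table above can be overridden in the same way as the virtual network. As a minimal sketch, the following restricts the source range of the master API security rule. It assumes that Kublr merges the properties into the generated security rule like it does for the virtual network. `sourceAddressPrefix` is a standard ARM security rule property, and 203.0.113.0/24 is a placeholder range.

```yaml
spec:
  locations:
    - name: default
      azure:
        armTemplateExtras:
          securityRuleMastersAllowAPI:
            properties:
              # placeholder CIDR; replace with the range allowed to reach the API
              sourceAddressPrefix: 203.0.113.0/24
```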

The following table includes all per-node group ARM template resources customizable using this method:

| Kublr Azure Resource | Kublr cluster spec property under spec.master.locations[*].azure.armTemplateExtras or spec.nodes[*].locations[*].azure.armTemplateExtras | Since Kublr Version |

|---|---|---|

| OS disk of types ‘vhd’ or ‘managedDisk’ (ignored for disk of type ‘image’) | osDisk | 1.20 |

| Master etcd data disks (ignored for non-master groups) | masterDataDisk | 1.20 |

| Group availability set (ignored for VMSS and VM groups) | availabilitySet | 1.20 |

| Network interfaces (for non-VMSS groups) or network interface profiles in scale sets (for VMSS groups) | networkInterface | 1.20 |

| IP configurations in the network interface/network interface profile resources | ipConfiguration | 1.20 |

| Virtual machines (ignored for VMSS groups) | virtualMachine | 1.20 |

| Virtual machine scale set (ignored for non-VMSS groups) | scaleSet | 1.20 |
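
For instance, the spot instance use case mentioned earlier could be approached through the scaleSet override of a stateless worker group. This is a sketch rather than a verified configuration: the group and location names are hypothetical, and the properties under scaleSet are the standard ARM properties of Microsoft.Compute/virtualMachineScaleSets, assumed to be merged into the resource generated by Kublr.

```yaml
spec:
  nodes:
    - name: spot-workers                  # hypothetical group name
      stateful: false
      locations:
        - locationRef: az                 # hypothetical location name
          azure:
            groupType: VirtualMachineScaleSet
            armTemplateExtras:
              scaleSet:
                properties:
                  virtualMachineProfile:
                    priority: Spot        # request spot capacity
                    evictionPolicy: Delete
                    billingProfile:
                      maxPrice: -1        # -1: pay up to the current on-demand price
```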

Additional Azure ARM template resources may be included in the generated ARM template via the spec.locations[*].azure.armTemplateExtras.resources array section of the Kublr cluster specification.

Here is an example of adding a virtual network peering as an additional resource via Kublr cluster spec:

    spec:
      locations:
        - azure:
            armTemplateExtras:
              resources:
                - apiVersion: '2019-11-01'
                  location: '[parameters(''region'')]'
                  name: '[concat(variables(''k8sVirtualNetwork''), ''/peering1'')]'
                  type: Microsoft.Network/virtualNetworks/virtualNetworkPeerings
                  properties:
                    allowForwardedTraffic: true
                    allowVirtualNetworkAccess: true
                    remoteVirtualNetwork:
                      id: '/subscriptions/abcd0123-4566-89ab-cdef-0123456789ab/resourceGroups/cluster2/providers/Microsoft.Network/virtualNetworks/cluster2-VirtualNetwork'

Any number of additional resources may be added.

The following properties in the spec.locations[*].azure.armTemplateExtras section allow specifying additional ARM template variables, additional ARM template functions, additional ARM template outputs, and the apiProfile value.

See the structure and syntax of ARM templates documentation for more information about these properties.

| ARM Template property | Kublr cluster spec property under spec.locations[*].azure.armTemplateExtras | Type | Since Kublr Version |

|---|---|---|---|

| API profile | apiProfile | string | 1.20 |

| Variables | variables | map | 1.20 |

| Functions | functions | array | 1.20 |

| Outputs | outputs | map | 1.20 |

Example:

    spec:
      locations:
        - azure:
            armTemplateExtras:
              apiProfile: latest
              variables:
                var1: var1-value
                var2: var2-value
              functions:
                - namespace: contoso
                  members:
                    uniqueName:
                      parameters:
                        - name: namePrefix
                          type: string
                      output:
                        type: string
                        value: "[concat(toLower(parameters('namePrefix')), uniqueString(resourceGroup().id))]"
              outputs:
                hostname:
                  type: string
                  value: "[reference(resourceId('Microsoft.Network/publicIPAddresses', variables('publicIPAddressName'))).dnsSettings.fqdn]"

Each resource specified in an ARM template requires an Azure API version.

Kublr tries to use the latest API versions available at the time the corresponding Kublr version is released.

In some situations, however, the API version of certain or all resources may need to be overridden. This can be done on a per-type and a per-resource basis.

If the API version is overridden for both a type and a specific resource of that type, the resource override takes precedence.

Per-type overrides are specified in the spec.locations[*].azure.armTemplateExtras.apiVersions section of the cluster spec; per-resource overrides are specified in the corresponding resource property (see the previous sections).

The spec.locations[*].azure.armTemplateExtras.apiVersions section is available since Kublr 1.24.

Override example:

    spec:
      locations:
        - azure:
            armTemplateExtras:
              apiVersions:
                # API version override for all resources of type 'Microsoft.Network/virtualNetworks'
                # generated by Kublr
                'Microsoft.Network/virtualNetworks': 2021-08-01
              virtualNetwork:
                # API version override for a specific virtual network resource generated by Kublr
                apiVersion: 2021-03-02