This document details the architecture of the clusters deployed by Kublr in AWS.

One AWS location in the Kublr cluster spec always corresponds to a single CloudFormation stack created by Kublr in an AWS account and an AWS region, which are specified via the location spec properties spec.locations[*].aws.awsApiAccessSecretRef and spec.locations[*].aws.region.

As the AWS API access secret includes the specification of a partition, the region must correspond to that partition. For example, if the AWS API access secret is for the AWS GovCloud partition, the region must also be a GovCloud region.

AWS account ID may also be specified in the spec.locations[*].aws.accountId property. If it is left empty, it will be automatically populated with the account ID corresponding to the provided access key.

Kublr cluster AWS network customizations are defined in the Kublr cluster specification sections spec.locations[*].aws, spec.master.locations[*].aws, and spec.nodes[*].locations[*].aws.

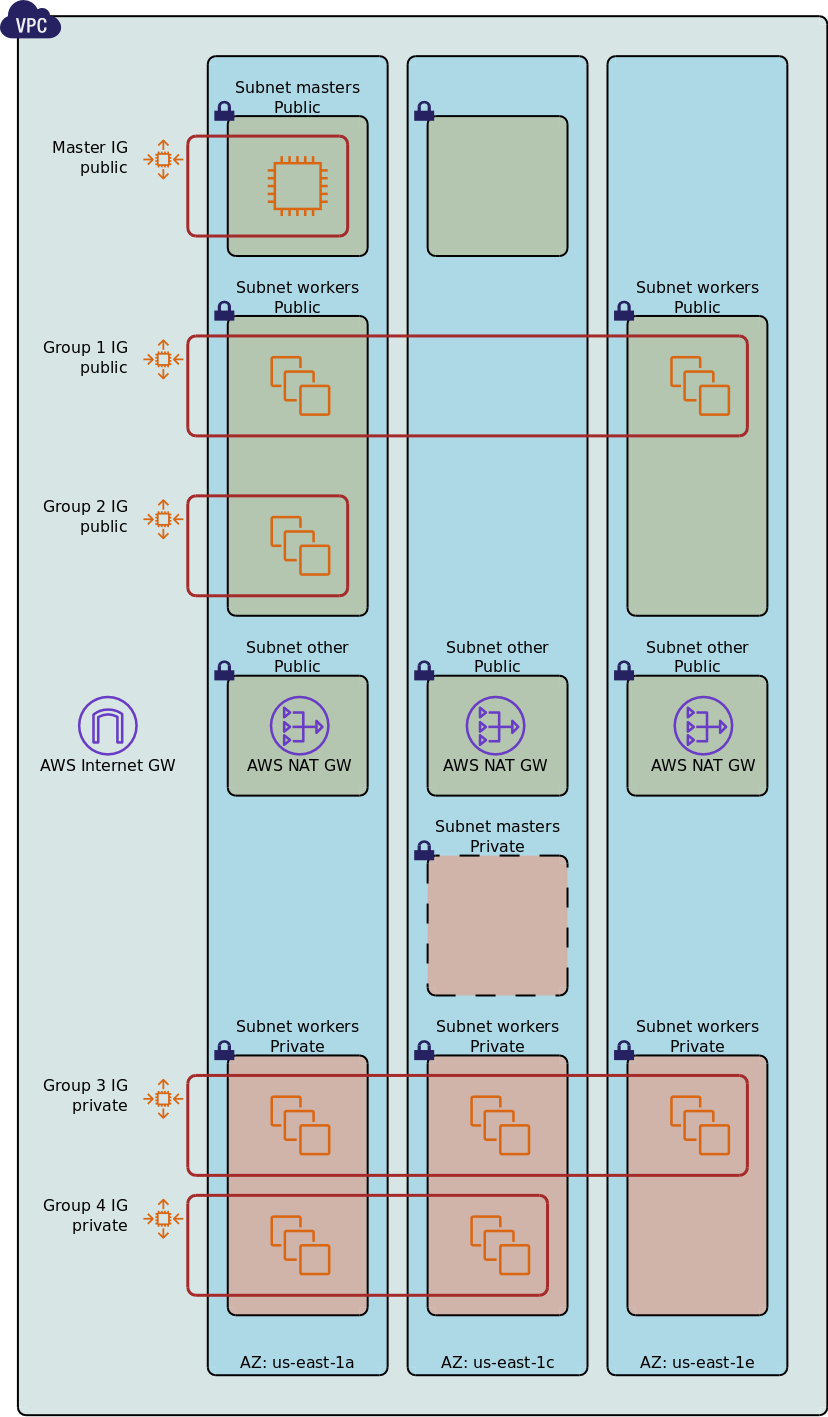

The following diagram shows a typical AWS network configuration for a Kublr cluster.

By default, Kublr creates a new VPC in the CloudFormation stack. This may be overridden: to use an existing VPC, specify its ID in the location’s vpcId property.

The automatically created VPC has the CloudFormation logical name NewVpc and therefore may be referenced as { Ref: NewVpc }.

By default Kublr will create the following subnets:

Master public subnets

These subnets are only created if:

- the master group is public (the nodeIpAllocationPolicy property is unset or NOT private), OR
- the master group is private (the nodeIpAllocationPolicy property is private) AND one or more public load balancers are created for the masters (via the masterElbAllocationPolicy or masterNlbAllocationPolicy property, or both).

One master public subnet is created for each availability zone specified for the master group in the group’s locations[0].aws.availabilityZones property. If this property is not specified, the list of availability zones is taken from the corresponding AWS location.

Master private subnets

These subnets are only created if the master group is declared private in the cluster spec (the nodeIpAllocationPolicy property is set to private).

One master private subnet is created for each availability zone specified for the master group in the group’s locations[0].aws.availabilityZones property. If this property is not specified, the list of availability zones is taken from the corresponding AWS location.

Worker public subnets

These subnets are only created if there are public worker groups in the cluster spec.

One worker public subnet is created for each availability zone in which a public worker group exists. A worker group’s availability zones are taken from its locations[*].aws.availabilityZones property. If that property is not specified, they are taken from the corresponding AWS location.

Public worker subnets are shared by all public worker groups present in the corresponding availability zone.

Worker private subnets

These subnets are only created if there are private worker groups in the cluster spec.

One worker private subnet is created for each availability zone in which a private worker group exists. A worker group’s availability zones are taken from its locations[*].aws.availabilityZones property. If that property is not specified, they are taken from the corresponding AWS location.

Private worker subnets are shared by all private worker groups present in the corresponding availability zone.

“Other” public subnets

These subnets hold NAT gateways and public ELB endpoints, which give instances in private groups access to and from the internet.

One “other” public subnet is created for each private subnet.

The automatically generated subnets have CloudFormation logical names in the format Subnet{Master|Node|Other}{Public|Private}{AZ#}. Here “AZ#” is the number of the corresponding availability zone. For example, AZ us-east-1a has number 0, us-east-1b has 1, us-east-1c has 2, and so on.

For example, Kublr may generate the following subnets for a cluster:

- SubnetMasterPublic3 - a public master subnet for AZ us-east-1d (or the corresponding AZ in a different region).
- SubnetNodePrivate2 - a private worker subnet for AZ us-east-1c (or the corresponding AZ in a different region).
- SubnetOtherPublic0 - a public NAT subnet for AZ us-east-1a (or the corresponding AZ in a different region).

See the section Pre-existing Subnets below for advanced use-cases.
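The naming convention above can be expressed as a short Python sketch. It is illustrative only, not Kublr's implementation. The helper names `az_number` and `subnet_logical_name` are hypothetical. The AZ number is assumed to come from the trailing letter of the zone name, as in the us-east-1 examples.

```python
def az_number(az_name: str) -> int:
    """Return the AZ number used in subnet names: us-east-1a -> 0, us-east-1b -> 1, ..."""
    # Assumption: the number is derived from the zone letter that follows the region name.
    if len(az_name) < 2 or not az_name[-1].isalpha() or not az_name[-2].isdigit():
        raise ValueError(f"unexpected availability zone name: {az_name!r}")
    return ord(az_name[-1].lower()) - ord("a")


def subnet_logical_name(role: str, visibility: str, az_name: str) -> str:
    """Build a Subnet{Master|Node|Other}{Public|Private}{AZ#} logical name."""
    if role not in ("Master", "Node", "Other"):
        raise ValueError(f"unknown subnet role: {role!r}")
    if visibility not in ("Public", "Private"):
        raise ValueError(f"unknown subnet visibility: {visibility!r}")
    if role == "Other" and visibility != "Public":
        raise ValueError("'Other' subnets are always public")
    return f"Subnet{role}{visibility}{az_number(az_name)}"


print(subnet_logical_name("Master", "Public", "us-east-1d"))  # SubnetMasterPublic3
print(subnet_logical_name("Node", "Private", "us-east-1c"))   # SubnetNodePrivate2
print(subnet_logical_name("Other", "Public", "us-east-1a"))   # SubnetOtherPublic0
```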

Whether a private or a public subnet is used for a specific instance group (master or worker) is defined by the instance group’s locations[*].aws.nodeIpAllocationPolicy property.

The property can take three values:

- default (same as privateAndPublic) - public group
- private - private group
- privateAndPublic - public group

Examples:

```yaml
spec:
  master:
    locations:
      - aws:
          # The master group is public.
          # Masters will run in SubnetMasterPublic* subnets.
          nodeIpAllocationPolicy: privateAndPublic
  nodes:
    - locations:
        - aws:
            # This worker group is private.
            # These instances will run in SubnetNodePrivate* subnets.
            nodeIpAllocationPolicy: private
    - locations:
        - aws:
            # This worker group is public.
            # These instances will run in SubnetNodePublic* subnets.
            nodeIpAllocationPolicy: privateAndPublic
```
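The following Python sketch shows how the policy value selects a subnet category. It is an illustration, not Kublr code, and the function names are hypothetical. An unset value is treated as public, as the master public subnet rules above describe.

```python
from typing import Optional

ALLOWED_POLICIES = ("default", "private", "privateAndPublic")


def is_private_group(node_ip_allocation_policy: Optional[str]) -> bool:
    """'private' means a private group; unset, 'default' and 'privateAndPublic' mean public."""
    policy = node_ip_allocation_policy or "default"
    if policy not in ALLOWED_POLICIES:
        raise ValueError(f"unsupported nodeIpAllocationPolicy: {policy!r}")
    return policy == "private"


def subnet_name_prefix(is_master: bool, node_ip_allocation_policy: Optional[str]) -> str:
    """Prefix of the Kublr-generated subnets in which the group's instances run."""
    role = "Master" if is_master else "Node"
    visibility = "Private" if is_private_group(node_ip_allocation_policy) else "Public"
    return f"Subnet{role}{visibility}"


# The three groups from the example above:
print(subnet_name_prefix(True, "privateAndPublic"))   # SubnetMasterPublic
print(subnet_name_prefix(False, "private"))           # SubnetNodePrivate
print(subnet_name_prefix(False, "privateAndPublic"))  # SubnetNodePublic
```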

A single Kublr location of AWS type may span several availability zones.

The list of zones is specified in the spec.locations[*].aws.availabilityZones field. If this field is left empty, it will be populated with the list of all availability zones accessible under the specified AWS account in the specified AWS region.

In addition, a separate list of availability zones may be specified for each instance group (master or worker) in the properties spec.master.locations[*].aws.availabilityZones and spec.nodes[*].locations[*].aws.availabilityZones.

These lists MUST always be subsets of the list of AZs specified in the corresponding AWS location.

Allocation of master and worker nodes over the availability zones and subnets depends on the instance group type; see more details in the sections Stateless and stateful instance groups.

Examples:

```yaml
spec:
  locations:
    # AWS location specification
    - name: aws1
      aws:
        # this location in the cluster is limited to 3 AZs
        availabilityZones:
          - us-east-1a
          - us-east-1c
          - us-east-1d
  # master group specification
  master:
    maxNodes: 3 # this is a 3-master cluster
    locations:
      - locationRef: aws1
        aws:
          availabilityZones:
            - us-east-1a # master0 is in us-east-1a
            - us-east-1a # master1 is in us-east-1a
            - us-east-1c # master2 is in us-east-1c
  # worker groups specifications
  nodes:
    - name: group1
      locations:
        - locationRef: aws1
          aws:
            # this group's instances will be distributed over 2 AZs
            availabilityZones:
              - us-east-1a
              - us-east-1c
    - name: group2
      locations:
        - locationRef: aws1
          aws:
            availabilityZones:
              - us-east-1b # ERROR! this AZ is not specified in the location's AZ list
```
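The subset rule is easy to check before submitting a spec. The following Python sketch uses a hypothetical helper that is not part of Kublr. It flags the group2 error from the example. Repeated zones, as in the master list, pass because only membership is checked.

```python
def validate_group_zones(location_zones: list[str], groups: dict[str, list[str]]) -> list[str]:
    """Return one error message per group AZ that is missing from the location's AZ list."""
    allowed = set(location_zones)
    errors = []
    for group_name, zones in groups.items():
        for zone in zones:
            if zone not in allowed:
                errors.append(f"{group_name}: {zone} is not in the location availabilityZones list")
    return errors


location_zones = ["us-east-1a", "us-east-1c", "us-east-1d"]
groups = {
    "master": ["us-east-1a", "us-east-1a", "us-east-1c"],  # repeated AZs are fine
    "group1": ["us-east-1a", "us-east-1c"],
    "group2": ["us-east-1b"],
}
print(validate_group_zones(location_zones, groups))
# ['group2: us-east-1b is not in the location availabilityZones list']
```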

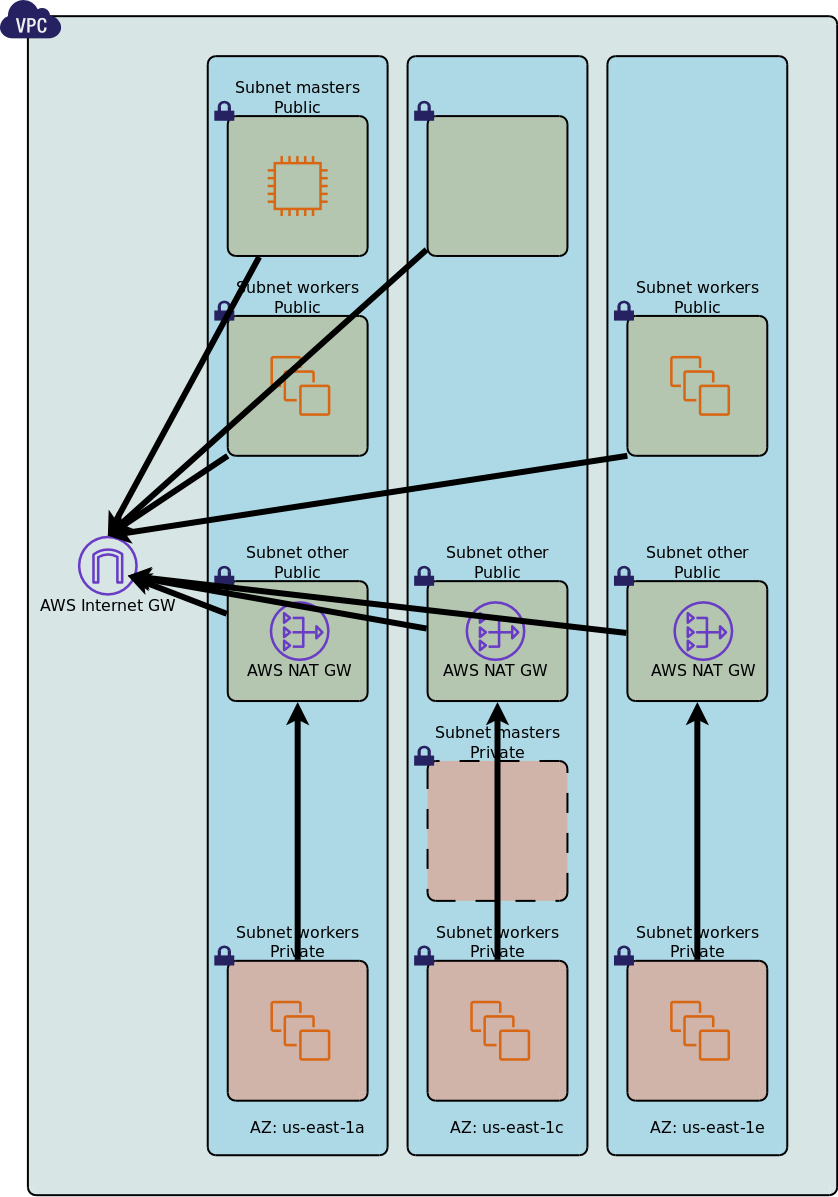

By default, Kublr creates an Internet Gateway with the CloudFormation logical name Gw for the VPC. The gateway is required for VMs inside the VPC to access the public internet, and NAT gateways depend on it as well. See the section Fully private VPC without NAT and IGW below for more details on building fully private clusters.

Kublr creates one private subnet for each AZ in which there is at least one private node group.

Instances in such private subnets reach the internet through a NAT gateway created in a public subnet.

By default, Kublr creates a NAT gateway in every AZ where master or worker nodes may run in private instance groups.

The NAT gateways are placed in the corresponding SubnetOtherPublic{AZ#} subnets.

A NAT gateway for a private subnet does not have to be in the same AZ. However, if it is in a different AZ, the private subnet’s internet access is vulnerable to failures of the NAT gateway’s AZ.

The spec.locations[*].aws.natAvailabilityZones property allows overriding the default behavior. When natAvailabilityZones is specified, the private subnets in AZ availabilityZones[i] use the NAT gateway in AZ natAvailabilityZones[i % len(natAvailabilityZones)]. For example:

- if natAvailabilityZones == ['us-east-1c'], a single NAT gateway in AZ us-east-1c is used for all private subnets;
- if natAvailabilityZones == ['us-east-1c', 'us-east-1a'] and availabilityZones == ['us-east-1a', 'us-east-1b', 'us-east-1d'], the NAT gateways in AZs us-east-1c, us-east-1a, and us-east-1c (again) are used for the private subnets in AZs us-east-1a, us-east-1b, and us-east-1d respectively;
- if natAvailabilityZones is undefined, null, or empty, a NAT gateway is created in each AZ with private subnets, and the private subnets in each AZ use the NAT gateway in the same AZ.

NAT gateway creation can also be disabled completely via the spec.locations[*].aws.natMode property.

NAT mode can be legacy, multi-zone, or none (default: multi-zone for new clusters, legacy for pre-existing ones):

- legacy mode is supported for compatibility with AWS clusters created by Kublr releases before 1.19;
- multi-zone mode is the default for all new clusters;
- none mode is used to avoid the automatic creation of NAT gateways.

Migration from legacy to multi-zone is possible. However, it may change the cluster public egress addresses, requires manual operation, and cannot be easily rolled back.

In legacy NAT mode, only one NAT gateway is created, in one of the availability zones, so it is not AZ fault tolerant. The public subnet used for the NAT gateway in legacy mode can change depending on the configuration of the master and worker node groups. In some situations this may prevent the CloudFormation stack from updating. NAT gateways created in multi-zone mode are not affected by configuration changes in the cluster, and therefore never prevent CloudFormation stacks from updating.
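The natAvailabilityZones rule above is simple modular indexing. The following Python sketch reproduces the documented examples. The function name is hypothetical and legacy mode is deliberately not modeled. The sketch assumes that natAvailabilityZones only matters in multi-zone mode; the document does not say this.

```python
from typing import Optional


def nat_zone_for_private_subnets(
    availability_zones: list[str],
    nat_availability_zones: Optional[list[str]] = None,
    nat_mode: str = "multi-zone",
) -> dict[str, Optional[str]]:
    """Map each location AZ to the AZ of the NAT gateway used by its private subnets."""
    if nat_mode == "none":
        # No NAT gateways are created at all.
        return {az: None for az in availability_zones}
    if nat_mode != "multi-zone":
        raise NotImplementedError("only the multi-zone and none modes are sketched here")
    if not nat_availability_zones:
        # Default: private subnets use the NAT gateway in their own AZ.
        return {az: az for az in availability_zones}
    # availabilityZones[i] -> natAvailabilityZones[i % len(natAvailabilityZones)]
    return {
        az: nat_availability_zones[i % len(nat_availability_zones)]
        for i, az in enumerate(availability_zones)
    }


print(nat_zone_for_private_subnets(
    ["us-east-1a", "us-east-1b", "us-east-1d"], ["us-east-1c", "us-east-1a"]))
# {'us-east-1a': 'us-east-1c', 'us-east-1b': 'us-east-1a', 'us-east-1d': 'us-east-1c'}
```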

Examples:

Skip creating NAT gateways. Note that cluster creation will most probably fail in this case unless special measures are taken to enable loading Docker images from a private repository accessible from the nodes in the private subnets.

```yaml
spec:
  locations:
    # AWS location specification
    - name: aws1
      aws:
        natMode: none
```

The following routing tables are created by Kublr by default:

Public subnet routing table

One routing table is created for all public subnets, with an internet route via the Internet gateway.

Private subnet routing tables

For each availability zone in which there are private subnets (master or worker), one private routing table is created with an internet route pointing at the corresponding NAT gateway.

Master and worker node instance groups in the Kublr cluster specification are mapped onto AWS AutoScaling groups.

Specific AWS objects created for an instance group depend on several instance group parameters:

stateful - true/false, specifying whether this group is stateful

Each stateful group is mapped onto as many AWS AutoScaling Groups of size one as there are nodes in the group.

Stateful instance groups have a fixed size (minNodes, initialNodes, and maxNodes must be the same) and do not support autoscaling. In exchange, they maintain each node’s identity over time and across restarts.

Each node in a stateful group is assigned a unique number from 0 to <group-size> - 1, called its “ordinal”. The node’s ordinal is passed to Kubernetes as the node label kublr.io/node-ordinal.

Master group is always stateful.

Stateless (non-stateful) groups are mapped onto a single AWS AutoScaling Group of the same (and potentially varying, if autoscaling is enabled) size as specified for the instance group.

Stateless instance groups may have different minNodes and maxNodes values, and support autoscaling.

locations[*].aws.groupType - the AWS architecture of the instance group. The following values are supported:

asg-lt - Auto-Scaling Group with a Launch Template

asg-mip - Auto-Scaling Group with a Launch Template and a Mixed Instance Policy

asg-lc - Auto-Scaling Group with a Launch Configuration

elastigroup - Spotinst Elastigroup (see the section Integration with spotinst elastigroups for more information).

asg (deprecated) - same as asg-lc, used in the clusters created by Kublr 1.17 and earlier

Note: Kublr releases before 1.19 support only the asg and elastigroup options.

locations[*].aws.pinToZone - whether instances in this instance group are pinned to a specific Availability Zone or not.

Possible values:

pin - instances are pinned to a specific AZ from the availabilityZones list.

Only instances of stateful groups have a persistent identity (the node ordinal). This value is therefore only meaningful, and only allowed, for stateful groups.

span - instances are not pinned to a specific AZ and can be created in any availability zone from the availabilityZones list.

This value may be specified for any non-master group, stateless or stateful.

default (default value) - the same as pin for stateful groups (including the master group, which is always stateful), and span for all other groups (i.e. non-master stateless groups).
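The mapping of instance groups onto AutoScaling Groups can be sketched in Python together with the pinToZone defaults and restrictions. The `InstanceGroup` class, the function names, and the plain dictionaries standing in for ASGs are hypothetical simplifications for illustration. They are not Kublr's data model, and real ASGs carry many more properties.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class InstanceGroup:
    """Hypothetical, simplified model of a Kublr instance group spec."""
    name: str
    stateful: bool
    min_nodes: int
    initial_nodes: int
    max_nodes: int
    is_master: bool = False
    pin_to_zone: Optional[str] = None  # None is treated like 'default'


def resolve_pin_to_zone(group: InstanceGroup) -> str:
    """Resolve pinToZone to 'pin' or 'span', enforcing the documented rules."""
    value = group.pin_to_zone or "default"
    if value == "default":
        return "pin" if group.stateful else "span"
    if value == "pin" and not group.stateful:
        raise ValueError(f"{group.name}: pin is only allowed for stateful groups")
    if value == "span" and group.is_master:
        raise ValueError(f"{group.name}: span is not allowed for the master group")
    if value not in ("pin", "span"):
        raise ValueError(f"{group.name}: unsupported pinToZone value {value!r}")
    return value


def autoscaling_groups(group: InstanceGroup) -> list[dict]:
    """Return a simplified description of the ASGs backing one instance group."""
    if group.is_master and not group.stateful:
        raise ValueError("the master group is always stateful")
    if group.stateful:
        if not group.min_nodes == group.initial_nodes == group.max_nodes:
            raise ValueError(f"{group.name}: stateful groups need minNodes == initialNodes == maxNodes")
        # One ASG of size 1 per node; the index doubles as the node ordinal
        # (exposed to Kubernetes as the kublr.io/node-ordinal label).
        return [{"ordinal": i, "min": 1, "max": 1} for i in range(group.max_nodes)]
    # Stateless: one ASG whose size may vary between minNodes and maxNodes.
    return [{"min": group.min_nodes, "max": group.max_nodes}]


masters = InstanceGroup("master", stateful=True, min_nodes=3, initial_nodes=3, max_nodes=3, is_master=True)
workers = InstanceGroup("group1", stateful=False, min_nodes=1, initial_nodes=2, max_nodes=5)
print(resolve_pin_to_zone(masters), autoscaling_groups(masters))
print(resolve_pin_to_zone(workers), autoscaling_groups(workers))
```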


The following instance group properties may be used to customize master and worker instance groups. They may be specified in the spec.master section for the master group, and in the spec.nodes[*] sections for the worker node groups:

minNodes, initialNodes, maxNodes - instance group size.

Note that for the master group, and more generally for any stateful group these properties MUST all have the same value.

autoscaling - true/false; if enabled, the AWS AutoScaling group is tagged so that the Kubernetes Cluster Autoscaler can identify it.

locations[*].aws.nodeIpAllocationPolicy - whether this group is considered private or public.

The property can take three values:

- default (same as privateAndPublic) - public group
- private - private group
- privateAndPublic - public group

This property defines whether the instances in this group will be started in private or public subnets, and whether public IPs will be associated with them.

locations[*].aws.sshKey - specifies the AWS managed SSH key to be provisioned for the instances in this instance group. If left undefined, no SSH key will be provisioned.

locations[*].aws.instanceType - specifies the AWS instance type to be used for this instance group (e.g. t3.medium).

See the section Autoscaling Groups and Instance Group Types for more detail on using the AWS mixed instances policy with Kublr instance groups.

locations[*].aws.overrideImageId, locations[*].aws.imageRootDeviceName - specify the AWS AMI image ID and the image root device name for this instance group. If imageRootDeviceName is left empty (recommended), Kublr automatically populates the correct value from the AMI metadata.

locations[*].aws.rootVolume, locations[*].aws.masterVolume - specify the parameters of the root EBS volume and of the master data (etcd data) volume for the instances in this instance group. The masterVolume property is only taken into account for the master instance group and is ignored for worker instance groups.

Both properties are structures with the following fields, which correspond directly to the CloudFormation EBS volume properties:

- type - one of the EBS volume types, e.g. gp2, io1, io2, sc1, st1, standard, etc.
- size - size in GiB
- iops - the number of provisioned IOPS
- encrypted - whether the EBS volume should be encrypted
- kmsKeyId - ARN or alias of the AWS KMS master key used to create the encrypted volume
- snapshotId - optional ID of a snapshot to create the EBS volume from
- deleteOnTermination - the deleteOnTermination property for the ASG EBS volume mappings

Properties passed directly to the AWS AutoScaling Group objects corresponding to this instance group in the CloudFormation template:

- cooldown - string
- loadBalancerNames - array of structures
- targetGroupARNs - array of structures

Properties passed directly to the AWS Launch Configuration and/or Launch Template objects corresponding to this instance group in the CloudFormation template:

- blockDeviceMappings - array of structures
- ebsOptimized - boolean
- instanceMonitoring - boolean
- placementTenancy - string
- spotPrice - string

In addition to specifying whether public IP addresses should be assigned to instances in Kublr instance groups, Kublr allows specifying whether these public IP addresses should be static AWS Elastic IPs (EIPs).

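This may be customized via the instance group’s locations[*].aws.eipAllocationPolicy property (spec.master.locations[*].aws.eipAllocationPolicy for the master group, spec.nodes[*].locations[*].aws.eipAllocationPolicy for worker groups).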

Allowed values:

- none - do not create and assign EIPs to the instances of this group
- public - create and assign EIPs to the instances of this group
- default - use the following automatic behavior:
  - none for multi-master groups
  - none for private single-master groups (nodeIpAllocationPolicy === 'private')
  - public for public single-master groups (nodeIpAllocationPolicy !== 'private')
  - none for private stateful worker groups (nodeIpAllocationPolicy === 'private' && stateful === true)
  - public for public stateful worker groups (nodeIpAllocationPolicy !== 'private' && stateful === true)
  - none for non-stateful worker groups (stateful === false)

Constraints:

- eipAllocationPolicy may not be set to public if the group is private (nodeIpAllocationPolicy === 'private'); Kublr will generate an error in this case.
- eipAllocationPolicy may not be set to public if the group is not stateful (stateful === false).

Examples:

```yaml
spec:
  master:
    locations:
      - aws:
          eipAllocationPolicy: public # assign EIPs to the master(s)
  nodes:
    - stateful: true
      locations:
        - aws:
            eipAllocationPolicy: public # assign EIPs to the workers in this public stateful instance group
    - stateful: false
      locations:
        - aws:
            eipAllocationPolicy: public # ERROR! the group is not stateful
    - stateful: true
      locations:
        - aws:
            nodeIpAllocationPolicy: private
            eipAllocationPolicy: public # ERROR! the group is not public
```
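The eipAllocationPolicy defaults and constraints condense into one decision function. This Python sketch is not Kublr code, and the function and parameter names are hypothetical. `group_size` stands for the number of masters when `is_master` is true. Multi-master groups resolve to none by default, matching the description of master endpoints below.

```python
from typing import Optional


def resolve_eip_policy(
    eip_allocation_policy: Optional[str],
    node_ip_allocation_policy: Optional[str],
    stateful: bool,
    is_master: bool,
    group_size: int,
) -> str:
    """Resolve eipAllocationPolicy to 'public' or 'none' and enforce the constraints."""
    private = node_ip_allocation_policy == "private"
    policy = eip_allocation_policy or "default"
    if policy == "public":
        if private:
            raise ValueError("eipAllocationPolicy=public is not allowed for a private group")
        if not stateful:
            raise ValueError("eipAllocationPolicy=public is not allowed for a non-stateful group")
        return "public"
    if policy == "none":
        return "none"
    if policy != "default":
        raise ValueError(f"unsupported eipAllocationPolicy: {policy!r}")
    if is_master:
        # Only a public single-master group gets an EIP by default.
        return "public" if group_size == 1 and not private else "none"
    # Worker groups: only public stateful groups get EIPs by default.
    return "public" if stateful and not private else "none"


print(resolve_eip_policy(None, None, stateful=True, is_master=True, group_size=1))   # public
print(resolve_eip_policy(None, None, stateful=True, is_master=True, group_size=3))   # none
print(resolve_eip_policy(None, None, stateful=False, is_master=False, group_size=5)) # none
```

With this function, the two groups marked ERROR in the example raise ValueError.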

It is important for Kubernetes clusters to have stable addresses/endpoints for the master node(s). In Kublr AWS clusters it is implemented via AWS load balancers and elastic IPs.

By default, Kublr automatically creates public load balancers for masters in multi-master clusters, and assigns a public EIP to the master in single-master clusters.

This default behavior may be customized via several properties in the custom cluster specification.

spec.master.locations[*].aws.masterNlbAllocationPolicy

Allowed values:

- none - do not create an NLB for masters
- private - create a private NLB for masters
- public - create a public NLB for masters
- privateAndPublic - create both public and private NLBs for masters
- auto - same as public for a multi-master cluster, and none for a single-master cluster
- default - same as auto, deprecated

The property is ignored in non-master instance groups.

By default (value empty):

spec.master.locations[*].aws.masterElbAllocationPolicy

Allowed values:

- none - do not create an ELB for masters
- private - create a private ELB for masters
- public - create a public ELB for masters
- privateAndPublic - create both public and private ELBs for masters
- auto - same as public for a multi-master cluster, and none for a single-master cluster
- default - same as auto, deprecated

This property is ignored in non-master instance groups.

By default (value empty):

The use of ELBs for masters is deprecated; prefer NLBs.

The spec.master.locations[*].aws.eipAllocationPolicy property may be used to customize EIP assignment to master instances.

By default, EIPs are assigned to public single-master groups and are not assigned in any other case (private or multi-master groups). See the section Automatic Elastic IP management above for more detail on using this property.
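The auto and default values of masterNlbAllocationPolicy and masterElbAllocationPolicy depend only on the number of masters. The following Python sketch applies to both properties. The function name and the string labels 'private' and 'public' are illustrative assumptions. An empty value is deliberately not modeled, because its default is not spelled out here.

```python
LB_POLICIES = {
    "none": frozenset(),
    "private": frozenset({"private"}),
    "public": frozenset({"public"}),
    "privateAndPublic": frozenset({"private", "public"}),
}


def master_load_balancers(policy: str, master_count: int) -> frozenset:
    """Which master LBs ('private', 'public') a masterNlb/ElbAllocationPolicy value implies."""
    if policy in ("auto", "default"):  # 'default' is the deprecated alias of 'auto'
        policy = "public" if master_count > 1 else "none"
    if policy not in LB_POLICIES:
        raise ValueError(f"unsupported load balancer policy: {policy!r}")
    return LB_POLICIES[policy]


print(sorted(master_load_balancers("auto", 3)))              # ['public']
print(sorted(master_load_balancers("auto", 1)))              # []
print(sorted(master_load_balancers("privateAndPublic", 1)))  # ['private', 'public']
```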

In AWS, when a public load balancer is used to access instances in private subnets, public subnets must exist in the corresponding availability zones. Kublr uses public master subnets for this purpose. As a result, for a cluster configured with private masters and one or more public load balancers, Kublr by default generates public master subnets in addition to the private master subnets in which the masters are started.

Custom public subnets for public master load balancers may be specified via the spec.master.locations[*].aws.privateMasterPublicLbSubnetIds property. This property is ignored in any other situation (e.g. for a non-master group, for public groups, or when no public ELB or NLB is created).

privateMasterPublicLbSubnetIds values may be string IDs of specific subnets, or objects allowed in CloudFormation stack templates, such as { Ref: MySubnet }.

If a subnet ID is specified in a certain position of the privateMasterPublicLbSubnetIds array, the AZ in which this subnet is located MUST also be specified in the corresponding position of the availabilityZones array.

Examples:

all possible master endpoints enabled (public/private ELB/NLB, and EIPs)

```yaml
spec:
  master:
    locations:
      - aws:
          eipAllocationPolicy: public
          masterNlbAllocationPolicy: privateAndPublic
          masterElbAllocationPolicy: privateAndPublic
```

only create private NLB for public masters

```yaml
spec:
  master:
    locations:
      - aws:
          eipAllocationPolicy: none
          masterNlbAllocationPolicy: private
          masterElbAllocationPolicy: none
```

use a custom public subnet for public LBs in one of the AZs for a private master group

```yaml
spec:
  master:
    locations:
      - aws:
          nodeIpAllocationPolicy: private # private master group
          masterNlbAllocationPolicy: public # create a public NLB
          availabilityZones: # masters in the following 2 zones
            - us-east-1b
            - us-east-1d
          privateMasterPublicLbSubnetIds:
            - '' # regular Kublr-generated SubnetMasterPublic1 for AZ us-east-1b
            - subnet-78178267345 # a custom public subnet for AZ us-east-1d
```
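The positional rule can be illustrated with a small Python sketch that resolves the public subnet for each master AZ. The function names are hypothetical, and the AZ number is assumed to be derived from the zone letter. Treating a missing entry like an empty string is an assumption; the example only shows an explicit ''.

```python
from typing import Union

# A subnet ID string or a CloudFormation object such as {"Ref": "MySubnet"}.
SubnetRef = Union[str, dict]


def az_number(az_name: str) -> int:
    """us-east-1a -> 0, us-east-1b -> 1, ... (the AZ# used in Kublr subnet logical names)."""
    return ord(az_name[-1]) - ord("a")


def public_lb_subnets(availability_zones: list[str], custom_subnets: list[SubnetRef]) -> list[SubnetRef]:
    """Public subnet used by the master public LBs in each AZ, matched by array position."""
    result: list[SubnetRef] = []
    for i, az in enumerate(availability_zones):
        # Assumption: a missing entry behaves like an empty string.
        custom = custom_subnets[i] if i < len(custom_subnets) else ""
        if custom:
            result.append(custom)  # custom subnet; it MUST be located in this AZ
        else:
            result.append({"Ref": f"SubnetMasterPublic{az_number(az)}"})
    return result


print(public_lb_subnets(["us-east-1b", "us-east-1d"], ["", "subnet-78178267345"]))
# [{'Ref': 'SubnetMasterPublic1'}, 'subnet-78178267345']
```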

By default Kublr tags automatically created AWS subnets for the worker and master nodes to ensure proper Kubernetes AWS cloud provider integration for Kubernetes Services of the LoadBalancer type.

See AWS Kubernetes load balancing documentation for more details on subnet tagging for Kubernetes on AWS.

The default tagging may be overridden in the cluster specification via the properties spec.master.locations[*].aws.availabilityZoneSpec and spec.nodes[*].locations[*].aws.availabilityZoneSpec.

The following example shows configuration of load balancer tagging policy for subnets generated by Kublr.

```yaml
spec:
  nodes:
    - name: mynodegroup
      locations:
        - aws:
            availabilityZoneSpec:
              us-east-1a: # availability zone name
                subnetMasterPublic: # category of subnets generated by Kublr
                  serviceLoadBalancerPublicPolicy: force # public load balancer tagging policy
                  serviceLoadBalancerInternalPolicy: force # private load balancer tagging policy
                subnetMasterPrivate: { ... }
                subnetNodePublic: { ... }
                subnetNodePrivate: { ... }
                subnetOtherPublic: { ... }
```

The policy properties can take values auto, disable or force.

The policy defines whether to tag the corresponding subnets with "kubernetes.io/role/elb": "1" and "kubernetes.io/role/internal-elb": "1", as documented in the AWS EKS documentation.

By default (when the policy is not defined or is set to auto), Kublr ensures that in each availability zone at least one private subnet is tagged for internal load balancers, and at least one public subnet is tagged for public load balancers. A subnet may be tagged either by the user (with the force policy) or automatically, in the following order of priority:

- public subnets: otherPublic, nodePublic, masterPublic
- private subnets: nodePrivate, masterPrivate

When a policy is set to disable or force, it overrides the default behavior: with disable the tags are NOT set, and with force they are set.
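The auto policy for one AZ and one tag kind can be sketched in Python. The sketch rests on three assumptions not confirmed by the text above:

- a forced subnet satisfies the requirement, so no further subnet is auto-tagged;
- disabled subnets are skipped;
- only the highest-priority remaining subnet is tagged.

The constant and function names are hypothetical.

```python
PUBLIC_PRIORITY = ["subnetOtherPublic", "subnetNodePublic", "subnetMasterPublic"]
INTERNAL_PRIORITY = ["subnetNodePrivate", "subnetMasterPrivate"]


def subnets_to_tag(existing: list[str], policies: dict[str, str], priority: list[str]) -> list[str]:
    """Categories of subnets in one AZ that receive one kind of load balancer tag.

    existing -- categories of Kublr-generated subnets present in the AZ
    policies -- category -> 'auto' | 'disable' | 'force' for this tag (missing means 'auto')
    priority -- PUBLIC_PRIORITY for kubernetes.io/role/elb,
                INTERNAL_PRIORITY for kubernetes.io/role/internal-elb
    """
    forced = [c for c in existing if policies.get(c, "auto") == "force"]
    if forced:
        return forced  # the user already tagged at least one subnet in this AZ
    for category in priority:
        if category in existing and policies.get(category, "auto") == "auto":
            return [category]  # highest-priority subnet that is not disabled
    return []


print(subnets_to_tag(["subnetNodePublic", "subnetOtherPublic"], {}, PUBLIC_PRIORITY))
# ['subnetOtherPublic']
```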

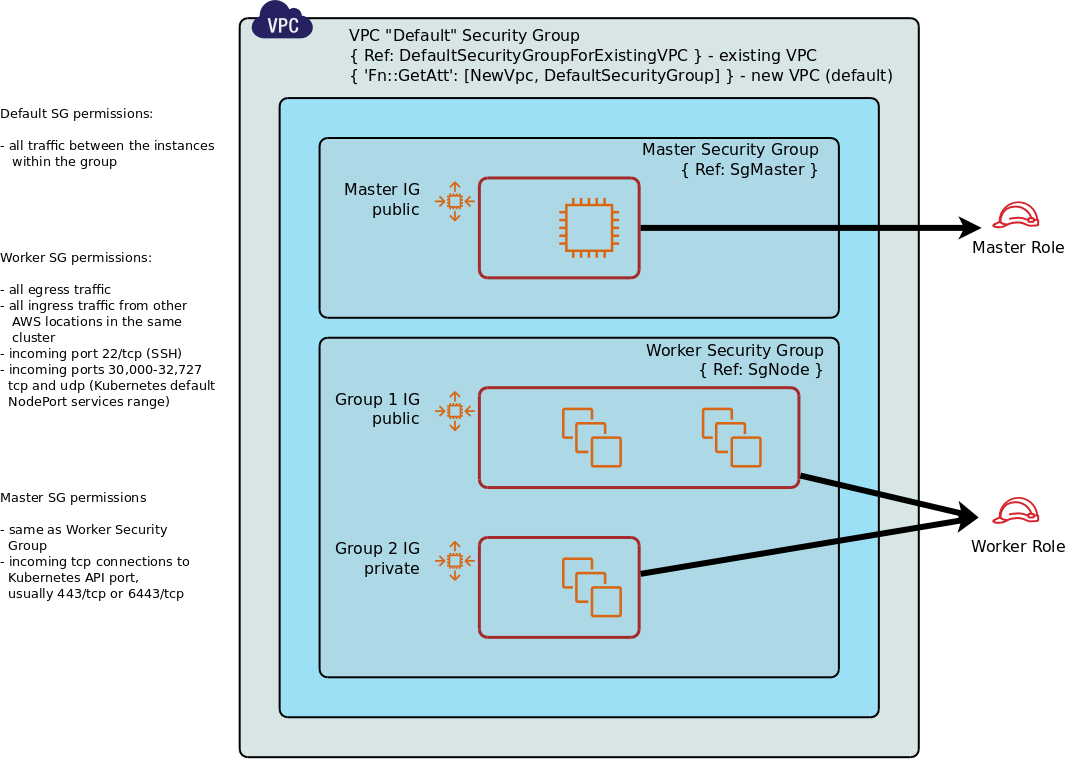

By default Kublr creates a number of resources related to the cluster network security:

By default, Kublr creates two IAM Instance Profiles with corresponding IAM Roles, assigned separately to master nodes and worker nodes.

Roles created by default:

Role assigned to master nodes with CloudFormation logical name: RoleMaster

Role path/name: /kublr/<cluster-name>-<location-name>-master

(for example /kublr/mycluster-aws1-master)

Role assigned to worker nodes with CloudFormation logical name: RoleNode

Role path/name: /kublr/<cluster-name>-<location-name>-node

(for example /kublr/mycluster-aws1-node)

Instance Profiles created by default:

Master instance profile with CloudFormation logical name: ProfileMaster

Worker instance profile with CloudFormation logical name: ProfileNode

The default permissions assigned to the roles are as follows:

Master nodes role default permissions:

```yaml
Policies:
  - PolicyName: root
    PolicyDocument:
      Version: '2012-10-17'
      Statement:
        - Effect: Allow
          Action: 's3:*'
          Resource:
            - !Sub 'arn:${AWS::Partition}:s3:::<cluster-secret-storage-S3-bucket>'
            - !Sub 'arn:${AWS::Partition}:s3:::<cluster-secret-storage-S3-bucket>/*'
        - Effect: Allow
          Action:
            - 'ec2:*'
            - 'elasticloadbalancing:*'
            - 'iam:CreateServiceLinkedRole'
            - 'ecr:GetAuthorizationToken'
            - 'ecr:BatchCheckLayerAvailability'
            - 'ecr:GetDownloadUrlForLayer'
            - 'ecr:GetRepositoryPolicy'
            - 'ecr:DescribeRepositories'
            - 'ecr:ListImages'
            - 'ecr:BatchGetImage'
            - 'autoscaling:DescribeTags'
            - 'autoscaling:DescribeAutoScalingGroups'
            - 'autoscaling:DescribeLaunchConfigurations'
            - 'autoscaling:DescribeAutoScalingInstances'
            - 'autoscaling:SetDesiredCapacity'
            - 'autoscaling:TerminateInstanceInAutoScalingGroup'
            - 'rds:DescribeDBInstances'
            - 'cloudformation:DescribeStackResources'
            - 'cloudformation:DescribeStacks'
            - 'iam:CreateServiceLinkedRole'
            - 'kms:DescribeKey'
          Resource: '*'
  - PolicyName: LogRolePolicy
    PolicyDocument:
      Version: '2012-10-17'
      Statement:
        - Effect: Allow
          Action:
            - 'logs:*'
          Resource:
            - !Sub 'arn:${AWS::Partition}:logs:${AWS::Region}:${AWS::AccountId}:log-group:${LogGroup}:*'
        - Effect: Allow
          Action:
            - 'logs:DescribeLogGroups'
          Resource:
            - !Sub 'arn:${AWS::Partition}:logs:${AWS::Region}:${AWS::AccountId}:log-group::log-stream:'
```

Worker nodes role default permissions:

```yaml
Policies:
  - PolicyName: root
    PolicyDocument:
      Version: '2012-10-17'
      Statement:
        - Effect: Allow
          Action: 's3:*'
          Resource:
            - !Sub 'arn:${AWS::Partition}:s3:::<cluster-secret-storage-S3-bucket>'
            - !Sub 'arn:${AWS::Partition}:s3:::<cluster-secret-storage-S3-bucket>/*'
        - Effect: Allow
          Action:
            - 'ec2:Describe*'
            - 'ec2:CreateSnapshot'
            - 'ec2:CreateTags'
            - 'ec2:DeleteSnapshot'
            - 'ec2:AttachVolume'
            - 'ec2:DetachVolume'
            - 'ec2:AssociateAddress'
            - 'ec2:DisassociateAddress'
            - 'ec2:ModifyInstanceAttribute'
            - 'ecr:GetAuthorizationToken'
            - 'ecr:BatchCheckLayerAvailability'
            - 'ecr:GetDownloadUrlForLayer'
            - 'ecr:GetRepositoryPolicy'
            - 'ecr:DescribeRepositories'
            - 'ecr:ListImages'
            - 'ecr:BatchGetImage'
            - 'autoscaling:DescribeTags'
            - 'autoscaling:DescribeAutoScalingGroups'
            - 'autoscaling:DescribeLaunchConfigurations'
            - 'autoscaling:DescribeAutoScalingInstances'
            - 'autoscaling:SetDesiredCapacity'
            - 'autoscaling:TerminateInstanceInAutoScalingGroup'
            - 'rds:DescribeDBInstances'
            - 'cloudformation:DescribeStackResources'
            - 'cloudformation:DescribeStacks'
          Resource: '*'
  - PolicyName: LogRolePolicy
    PolicyDocument:
      Version: '2012-10-17'
      Statement:
        - Effect: Allow
          Action:
            - 'logs:*'
          Resource:
            - !Sub 'arn:${AWS::Partition}:logs:${AWS::Region}:${AWS::AccountId}:log-group:${LogGroup}:*'
        - Effect: Allow
          Action:
            - 'logs:DescribeLogGroups'
          Resource:
            - !Sub 'arn:${AWS::Partition}:logs:${AWS::Region}:${AWS::AccountId}:log-group::log-stream:'
```

Default Kublr IAM Roles and Instance Profiles behavior may be customized via several properties in the custom cluster specification.

spec.locations[*].aws.iamRoleMasterPathName - if defined, an existing AWS IAM Role with the specified path will be used for master nodes. Kublr will not create the role for master nodes and will use the provided one instead.

spec.locations[*].aws.iamInstanceProfileMasterPathName - if defined, an existing AWS IAM Instance Profile with the specified path will be used for master nodes. Kublr will not create the profile for master nodes and will use the provided one instead.

spec.locations[*].aws.iamRoleNodePathName - if defined, an existing AWS IAM Role with the specified path will be used for worker nodes. Kublr will not create the role for worker nodes and will use the provided one instead.

spec.locations[*].aws.iamInstanceProfileNodePathName - if defined, an existing AWS IAM Instance Profile with the specified path will be used for worker nodes. Kublr will not create the profile for worker nodes and will use the provided one instead.

spec.locations[*].aws.iamRoleMasterCloudFormationExtras (pre-1.24 Kublr) / spec.locations[*].aws.cloudFormationExtras.iamRoleMaster (Kublr 1.24+) - additional parameters and policies for the IAM Role for master nodes.

The object defined in this property will be merged into the CloudFormation definition of the master nodes IAM Role generated by Kublr.

This property may be used in particular to provide additional permission policies for the master nodes.

spec.locations[*].aws.iamRoleNodeCloudFormationExtras (pre-1.24 Kublr) / spec.locations[*].aws.cloudFormationExtras.iamRoleNode (Kublr 1.24+) - additional parameters and policies for the IAM Role for worker nodes.

The object defined in this property will be merged into the CloudFormation definition of the worker nodes IAM Role generated by Kublr.

This property may be used in particular to provide additional permission policies for the worker nodes.

Examples:

use a custom role for master nodes:

```yaml
spec:
  locations:
    - aws:
        iamRoleMasterPathName: '/my-custom-master-role'
```

provide additional permissions for worker nodes:

```yaml
spec:
  locations:
    - aws:
        cloudFormationExtras:
          iamRoleNode:
            Properties:
              Policies:
                - PolicyName: allow-rds
                  PolicyDocument:
                    Version: '2012-10-17'
                    Statement:
                      - Effect: Allow
                        Action:
                          - 'rds:*'
                        Resource: '*'
```

By default, Kublr explicitly creates two security groups, one for the master nodes and one for the worker nodes. A third, the default VPC security group, is created implicitly with the cluster VPC.

Default Security Group - a security group that includes all cluster nodes, both master and worker

When Kublr creates the VPC, the corresponding VPC default SG is used as the default security group.

Refer to it as { "Fn::GetAtt" : [ "NewVpc", "DefaultSecurityGroup" ] }.

When Kublr is deployed into an existing VPC specified in the Kublr cluster spec by the spec.locations[*].aws.vpcId property, Kublr creates a separate default security group with the CloudFormation logical name DefaultSecurityGroupForExistingVPC.

Refer to it as { "Ref" : "DefaultSecurityGroupForExistingVPC" }.

Default permissions:

all traffic between any instances within this SG

SG Rule logical name since Kublr 1.21: SgDefaultIngressAllFromSg

(since Kublr 1.21) all egress traffic

SG Rule logical name since Kublr 1.21: SgDefaultEgressAllToAll

(since Kublr 1.21) all ingress traffic from other AWS locations in the same cluster (this is rarely used - only for multi-location clusters)

SG Rule logical name since Kublr 1.21: SgDefaultIngressAllFromLocation<AWS-Account-ID><AWS-Region><Kublr-location-name>

(since Kublr 1.21) incoming TCP and UDP connections to ports 30000-32767 from any address (the Kubernetes default NodePort services range)

SG Rule logical names since Kublr 1.21: SgDefaultIngressNodePortTCPFromAll and SgDefaultIngressNodePortUDPFromAll

Worker Security Group - a security group that includes the cluster worker nodes

CloudFormation logical name: SgNode; refer to it as { Ref: SgNode }

Default permissions:

(before Kublr 1.21) all egress traffic

(before Kublr 1.21) all ingress traffic from other AWS locations in the same cluster (rarely used - only for multi-location clusters)

(before Kublr 1.21) incoming TCP and UDP connections to ports 30000-32767 from any address (the Kubernetes default NodePort services range)

(before Kublr 1.21) incoming TCP connections to port 22/tcp from any address (SSH)

Starting with Kublr 1.21 this rule is disabled by default and can be enabled via the spec.locations[*].aws.enableSecurityGroupRulesRegexp property (see the section below on customization).

SG Rule logical name since Kublr 1.21: SgNodeIngressSSHFromAll.

Master Security Group - a security group into which cluster master nodes are included

CloudFormation Logical Name: SgMaster; refer to it as { Ref: SgMaster }

Default permissions:

(before Kublr 1.21) all egress traffic

(before Kublr 1.21) all ingress traffic from other AWS locations in the same cluster (this is rarely used - only for multi-location clusters)

(before Kublr 1.21) incoming TCP and UDP connections to ports 30,000-32,767 from any address (Kubernetes default NodePort services range)

(before Kublr 1.21) incoming TCP connections to port 22 from any address (SSH)

Starting with Kublr 1.21 this rule is disabled by default; it can be enabled with the spec.locations[*].aws.enableSecurityGroupRulesRegexp property (see the section below on customization).

SG Rule logical name since Kublr 1.21: SgMasterIngressSSHFromAll.

incoming TCP connections to the Kubernetes API port, usually 443/tcp or 6443/tcp

SG Rule logical name since Kublr 1.21: SgMasterIngressKubeTLSFromAll.

The default behavior of Kublr security groups may be customized via several properties in the cluster specification.

spec.locations[*].aws.skipSecurityGroupDefault - if defined and set to true, the default security group will not be created by Kublr when the cluster is deployed into an existing VPC.

spec.locations[*].aws.skipSecurityGroupMaster - if defined and set to true, the master security group will not be created by Kublr.

spec.locations[*].aws.skipSecurityGroupNode - if defined and set to true, the worker security group will not be created by Kublr.

These three properties are usually used together with the properties spec.locations[*].aws.existingSecurityGroupIds, spec.master.locations[*].aws.existingSecurityGroupIds, and spec.nodes[*].locations[*].aws.existingSecurityGroupIds.

spec.locations[*].aws.existingSecurityGroupIds - list of additional security groups to add to all cluster nodes.

spec.master.locations[*].aws.existingSecurityGroupIds - list of additional security groups to add to the cluster master nodes.


spec.nodes[*].locations[*].aws.existingSecurityGroupIds - list of additional security groups to add to the nodes of a specific node group in the cluster.


(since Kublr 1.21) spec.locations[*].aws.skipSecurityGroupRulesRegexp - regexp to disable security group rules that are enabled by default; rules whose CloudFormation logical names match the provided regexp (if specified) will be disabled.

(since Kublr 1.21) spec.locations[*].aws.enableSecurityGroupRulesRegexp - regexp to enable security group rules that are disabled by default; rules whose CloudFormation logical names match the provided regexp (if specified) will be enabled.
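To make the interplay of the skip and existingSecurityGroupIds properties concrete, here is a minimal Python sketch of which security groups an instance ends up in. It is a hypothetical model, not Kublr code: the function name and the string labels (such as "NewVpc.DefaultSecurityGroup") are illustrative, specs are plain dicts, and it assumes the worker-group skip flag is named skipSecurityGroupNode.

```python
def effective_security_groups(role, existing_vpc, location_aws, group_aws):
    """Hypothetical model of the security groups an instance is placed in.

    role: "master" or "worker"
    existing_vpc: True if spec.locations[*].aws.vpcId points to an existing VPC
    location_aws: the spec.locations[*].aws section as a dict
    group_aws: the master's or node group's locations[*].aws section as a dict
    """
    groups = []
    # Default SG: the VPC's own default SG when Kublr creates the VPC;
    # otherwise DefaultSecurityGroupForExistingVPC, unless it is skipped.
    if not existing_vpc:
        groups.append("NewVpc.DefaultSecurityGroup")
    elif not location_aws.get("skipSecurityGroupDefault", False):
        groups.append("DefaultSecurityGroupForExistingVPC")
    # Role-specific SG, unless skipped (skipSecurityGroupNode is an assumed name).
    if role == "master" and not location_aws.get("skipSecurityGroupMaster", False):
        groups.append("SgMaster")
    if role == "worker" and not location_aws.get("skipSecurityGroupNode", False):
        groups.append("SgNode")
    # Location-level extra SGs apply to all nodes; group-level ones to this group only.
    groups.extend(location_aws.get("existingSecurityGroupIds", []))
    groups.extend(group_aws.get("existingSecurityGroupIds", []))
    return groups


# Existing VPC: all standard groups skipped and replaced by hypothetical pre-existing ones.
location = {
    "skipSecurityGroupDefault": True,
    "skipSecurityGroupMaster": True,
    "skipSecurityGroupNode": True,
    "existingSecurityGroupIds": ["sg-0aaa"],
}
print(effective_security_groups("master", True, location, {"existingSecurityGroupIds": ["sg-0bbb"]}))
# ['sg-0aaa', 'sg-0bbb']
```

Note that in this model skipSecurityGroupDefault has no effect when Kublr creates the VPC, matching the description above.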

Examples:

add all cluster instances to a custom security group (to replace a standard Kublr security group with it, also set the corresponding skipSecurityGroup* property described above):

```yaml
spec:
  locations:
    - name: aws1
      aws:
        # a custom security group included in the cluster spec as an extra resource
        cloudFormationExtras:
          resources:
            SgMyCustomSecurityGroup:
              Type: AWS::EC2::SecurityGroup
              Properties:
                GroupDescription: { Ref: 'AWS::StackName' }
                # NewVpc exists only when Kublr creates the VPC
                VpcId: { Ref: NewVpc }
                Tags:
                  - Key: Name
                    Value: { 'Fn::Sub': '${AWS::StackName}-sg-my-custom-security-group' }
                SecurityGroupEgress:
                  - CidrIp: 0.0.0.0/0
                    IpProtocol: -1
                    FromPort: 0
                    ToPort: 65535
                SecurityGroupIngress:
                  - CidrIp: 0.0.0.0/0
                    IpProtocol: -1
                    FromPort: 0
                    ToPort: 65535
        # include all instances into the custom security group
        existingSecurityGroupIds:
          - { Ref: SgMyCustomSecurityGroup }
```

add a custom security group rule to a Kublr-generated security group:

```yaml
spec:
  locations:
    - name: aws1
      aws:
        # custom security group rules included in the cluster spec as extra resources
        cloudFormationExtras:
          resources:
            MyCustomSgRuleForWorkers:
              Type: AWS::EC2::SecurityGroupIngress
              Properties:
                GroupId: { Ref: SgNode }
                CidrIp: 0.0.0.0/0
                IpProtocol: tcp
                FromPort: 2000
                ToPort: 3000
            MyCustomSgRuleForMasters:
              Type: AWS::EC2::SecurityGroupIngress
              Properties:
                GroupId: { Ref: SgMaster }
                CidrIp: 0.0.0.0/0
                IpProtocol: tcp
                FromPort: 4000
                ToPort: 4000
            # use this rule when Kublr creates the VPC (NewVpc exists only in that case)
            MyCustomSgRuleForAllNodes:
              Type: AWS::EC2::SecurityGroupIngress
              Properties:
                GroupId: { 'Fn::GetAtt': [NewVpc, DefaultSecurityGroup] }
                CidrIp: 0.0.0.0/0
                IpProtocol: tcp
                FromPort: 5000
                ToPort: 5000
            # use this rule instead when the cluster is deployed into an existing VPC
            # (DefaultSecurityGroupForExistingVPC exists only in that case)
            MyCustomSgRuleForAllNodesInClusterWithExistingVPC:
              Type: AWS::EC2::SecurityGroupIngress
              Properties:
                GroupId: { Ref: DefaultSecurityGroupForExistingVPC }
                CidrIp: 0.0.0.0/0
                IpProtocol: tcp
                FromPort: 5000
                ToPort: 5000
```

(since Kublr 1.21) enable SSH access to masters and nodes, and disable the default Kubernetes API and NodePort access rules (usually a replacement rule should be specified to allow KCP access to the cluster API):

```yaml
spec:
  locations:
    - name: aws1
      aws:
        enableSecurityGroupRulesRegexp: '(Master|Node).*SSH'
        skipSecurityGroupRulesRegexp: 'KubeTLS|NodePort'
```
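The effect of the last example can be checked with a small Python sketch that applies the two regexps to the rule logical names listed in the Default permissions sections above. It assumes unanchored matching (Python's re.search), which the example patterns suggest; the regexp engine and anchoring Kublr actually uses are not stated here. The dict and function names are hypothetical, and the multi-location rule is omitted for brevity.

```python
import re

# Kublr 1.21+ rule logical names from the Default permissions lists,
# mapped to whether each rule is enabled by default.
DEFAULT_RULES = {
    "SgDefaultIngressAllFromSg": True,
    "SgDefaultEgressAllToAll": True,
    "SgDefaultIngressNodePortTCPFromAll": True,
    "SgDefaultIngressNodePortUDPFromAll": True,
    "SgMasterIngressKubeTLSFromAll": True,
    "SgNodeIngressSSHFromAll": False,
    "SgMasterIngressSSHFromAll": False,
}


def active_rules(rules, enable_regexp=None, skip_regexp=None):
    """Return the names of rules kept in the template (unanchored matching assumed)."""
    active = []
    for name, enabled_by_default in rules.items():
        if enabled_by_default:
            # skipSecurityGroupRulesRegexp can only turn off rules that are on by default.
            keep = not (skip_regexp and re.search(skip_regexp, name))
        else:
            # enableSecurityGroupRulesRegexp can only turn on rules that are off by default.
            keep = bool(enable_regexp and re.search(enable_regexp, name))
        if keep:
            active.append(name)
    return active


print(active_rules(DEFAULT_RULES, r"(Master|Node).*SSH", r"KubeTLS|NodePort"))
# ['SgDefaultIngressAllFromSg', 'SgDefaultEgressAllToAll', 'SgNodeIngressSSHFromAll', 'SgMasterIngressSSHFromAll']
```

The sketch shows why a replacement rule for the Kubernetes API is usually needed: SgMasterIngressKubeTLSFromAll no longer appears in the result.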

The IP CIDR block of a VPC created by Kublr for an AWS location is defined in the spec.locations[*].aws.vpcCidrBlock property.

If left empty by the user, it is set automatically by Kublr to 172.16.0.0/16.

If more than one AWS location is defined for the cluster, the following values are used sequentially for locations with index 1, 2, 3, etc.: 172.18.0.0/16, 172.19.0.0/16, 172.20.0.0/16, 172.21.0.0/16, 172.22.0.0/16, 172.23.0.0/16, 172.24.0.0/16, 172.25.0.0/16, 172.26.0.0/16, 172.27.0.0/16, 172.28.0.0/16, 172.29.0.0/16, 172.30.0.0/16, 172.31.0.0/16.
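The default sequence above can be summarized by a short Python function. This is a sketch of the documented values only; the function name is hypothetical, and since the list stops at 172.31.0.0/16, the sketch raises an error for higher location indices rather than guessing.

```python
def default_vpc_cidr(location_index):
    """Default vpcCidrBlock for the AWS location with the given index (sketch)."""
    if location_index == 0:
        return "172.16.0.0/16"
    if 1 <= location_index <= 14:
        # 172.17.0.0/16 is not in the list: index 1 -> 172.18.0.0/16, index 14 -> 172.31.0.0/16
        return f"172.{17 + location_index}.0.0/16"
    # The documented list ends at 172.31.0.0/16.
    raise ValueError(f"no documented default VPC CIDR for location index {location_index}")


print([default_vpc_cidr(i) for i in range(4)])
# ['172.16.0.0/16', '172.18.0.0/16', '172.19.0.0/16', '172.20.0.0/16']
```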

CIDR block allocation for subnets in a specific AWS location is defined by the data structure stored in the spec.locations[*].aws.cidrBlocks property.

The property has the following structure (see the Auto-generated Subnets section for more details on subnet usage):

```yaml
spec:
  locations:
    - aws:
        ...
        cidrBlocks:
          masterPublic:
            - ''
            - '<cidr for master public subnet in corresponding availability zone>'
            ...
          masterPrivate:
            - ''
            - '<cidr for master private subnet in corresponding availability zone>'
            ...
          nodePublic:
            - ''
            - '<cidr for worker public subnet in corresponding availability zone>'
            ...
          nodePrivate:
            - ''
            - '<cidr for worker private subnet in corresponding availability zone>'
            ...
          otherPublic:
            - ''
            - '<cidr for other public subnet in corresponding availability zone>'
            ...
```

CIDR blocks in the masterPublic, masterPrivate, nodePublic, nodePrivate, and otherPublic arrays are specified according to availability zone indices.

The availability zone index is the index of the zone in the list of all possible zones in this region, sorted in standard lexicographical order. E.g. zones us-east-1a, us-east-1c, and us-east-1d have indices 0, 2, and 3 respectively.

Therefore, for example, if three public masters are defined, with two masters placed in zone us-east-1b (zone index 1) and one master placed in zone us-east-1d (zone index 3), then at least the following CIDRs must be specified:

```yaml
spec:
  locations:
    - aws:
        ...
        cidrBlocks:
          masterPublic:
            - ''
            - '<cidr for master subnet in zone us-east-1b>'
            - ''
            - '<cidr for master subnet in zone us-east-1d>'
```

Each value in these arrays must either be a valid CIDR or an empty string (if unused or undefined).
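The index rule and the validity requirement can be sketched in Python with the standard ipaddress module. The helper names are hypothetical, the us-east-1 zone list is an assumed input (in practice it would come from the EC2 API), and the sample CIDRs are the ones the default allocation rules below would give zones 1 and 3.

```python
import ipaddress


def az_index(zone, region_zones):
    """Index of a zone in the lexicographically sorted list of all zones in the region."""
    return sorted(region_zones).index(zone)


def cidr_array(zone_cidrs, region_zones):
    """Build a cidrBlocks array with each CIDR at its zone's index and '' elsewhere."""
    by_index = {az_index(zone, region_zones): cidr for zone, cidr in zone_cidrs.items()}
    length = max(by_index) + 1 if by_index else 0
    return [by_index.get(i, "") for i in range(length)]


def validate_cidr_array(values):
    """Raise ValueError unless every value is a valid CIDR or an empty string."""
    for value in values:
        if value != "":
            ipaddress.ip_network(value)  # strict parsing: host bits must be zero


# Assumed zone list for us-east-1.
US_EAST_1 = ["us-east-1a", "us-east-1b", "us-east-1c", "us-east-1d", "us-east-1e", "us-east-1f"]
master_public = cidr_array({"us-east-1b": "172.16.4.0/23", "us-east-1d": "172.16.8.0/23"}, US_EAST_1)
validate_cidr_array(master_public)
print(master_public)  # ['', '172.16.4.0/23', '', '172.16.8.0/23']
```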

If some CIDR blocks are not specified in the cluster specification, Kublr uses its own rules to allocate the CIDR blocks required for the specified node groups.

If a CIDR block is specified in the cluster specification (either by the user, or automatically by Kublr when the cluster was created), Kublr will not try to update this value, but it will validate that the CIDR blocks used by the cluster subnets do not intersect.
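An intersection check of this kind is straightforward with Python's ipaddress module. The sketch below is illustrative only (the function name and sample values are hypothetical, and Kublr's own validation may cover more than the cidrBlocks arrays): it reports every pair of non-empty entries in a cidrBlocks-like structure that overlap.

```python
import ipaddress
from itertools import combinations


def overlapping_pairs(cidr_blocks):
    """Return (name, name) pairs of non-empty cidrBlocks entries that intersect."""
    nets = [
        (f"{key}[{i}]", ipaddress.ip_network(cidr))
        for key, values in cidr_blocks.items()
        for i, cidr in enumerate(values)
        if cidr
    ]
    return [
        (name_a, name_b)
        for (name_a, net_a), (name_b, net_b) in combinations(nets, 2)
        if net_a.overlaps(net_b)
    ]


blocks = {
    "masterPublic": ["", "172.16.4.0/23"],
    "nodePublic": ["", "172.16.32.0/20"],
    "otherPublic": ["", "172.16.4.64/26"],  # deliberately inside masterPublic[1]
}
print(overlapping_pairs(blocks))  # [('masterPublic[1]', 'otherPublic[1]')]
```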

Automatic CIDR allocation rules work as follows:

Split the VPC CIDR provided in spec.locations[*].aws.vpcCidrBlock into 2 equal subranges, and reserve the first one for public subnets and the second one for private subnets.

Split the public CIDR subrange into 8 equal subranges and use the first one for public master and other subnets, and the other 7 ranges for public worker AZ subnets in the AZs with indices 0 through 6.

Split the public master and other CIDR subrange into 8 equal subranges and use the first one for public non-master/auxiliary subnets, and the other 7 ranges for public master AZ subnets in the AZs with indices 0 through 6.

Split the public non-master/auxiliary subrange into 8 equal subranges, of which the first one is reserved and not used by Kublr, and the other 7 ranges are used for the otherPublic subnets in the AZs with indices 0 through 6.

Split the private CIDR subrange into 8 equal subranges and use the first one for private master and other subnets, and the other 7 ranges for private worker AZ subnets in the AZs with indices 0 through 6.

Split the private master and other CIDR subrange into 8 equal subranges, of which the first one is reserved for custom applications and not used by Kublr, and the other 7 ranges are used for private master AZ subnets in the AZs with indices 0 through 6.
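The allocation rules can be expressed with Python's standard ipaddress module, where splitting into 8 equal subranges means adding 3 bits to the prefix length. This is a sketch, not Kublr's implementation; the function names are hypothetical, and it assumes a VPC CIDR large enough for all the splits, such as the default /16. What Kublr does for much smaller VPC CIDRs is not described here, and this sketch would raise ValueError once a prefix length would exceed 32.

```python
import ipaddress


def split8(network):
    """Split a network into 8 equal subranges (3 more prefix bits)."""
    return list(network.subnets(prefixlen_diff=3))


def auto_cidr_blocks(vpc_cidr):
    """Sketch of the automatic allocation rules; arrays are indexed by AZ index 0..6."""
    vpc = ipaddress.ip_network(vpc_cidr)
    # First half for public subnets, second half for private subnets.
    public, private = vpc.subnets(prefixlen_diff=1)
    # Public half: [public master and other] + 7 nodePublic ranges.
    public_master_other, *node_public = split8(public)
    # Public master and other: [public non-master/auxiliary] + 7 masterPublic ranges.
    public_aux, *master_public = split8(public_master_other)
    # Auxiliary range: [reserved] + 7 otherPublic ranges.
    _reserved_public, *other_public = split8(public_aux)
    # Private half: [private master and other] + 7 nodePrivate ranges.
    private_master_other, *node_private = split8(private)
    # Private master and other: [reserved for custom use] + 7 masterPrivate ranges.
    _reserved_private, *master_private = split8(private_master_other)
    ranges = {
        "masterPublic": master_public,
        "masterPrivate": master_private,
        "nodePublic": node_public,
        "nodePrivate": node_private,
        "otherPublic": other_public,
    }
    return {key: [str(net) for net in nets] for key, nets in ranges.items()}


blocks = auto_cidr_blocks("172.16.0.0/16")
print(blocks["masterPublic"][0], blocks["nodePrivate"][6])  # 172.16.2.0/23 172.16.240.0/20
```

The values it produces for 172.16.0.0/16 match the allocation listed below.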

So, for example, for the default value 172.16.0.0/16 of spec.locations[0].aws.vpcCidrBlock, the subranges allocated automatically by Kublr according to these rules are as follows:

- 172.16.0.0/16 (64K IPs):
  - 172.16.0.0/17 - reserved for public subnets (32K IPs)
    - 172.16.0.0/20 - reserved for public master and other subnets (4K IPs)
      - 172.16.0.0/23 - reserved for public non-master/auxiliary subnets (512 IPs)
        - 172.16.0.0/26 - reserved and not used by Kublr (64 IPs)
        - 172.16.0.64/26, … , 172.16.1.192/26 - allocated for otherPublic (zones 0, 1, … , 6; 64 IPs each) (7 * 64 IPs)
      - 172.16.2.0/23, … , 172.16.14.0/23 - allocated for masterPublic (zones 0, 1, … , 6; 512 IPs each) (7 * 512 IPs)
    - 172.16.16.0/20, … , 172.16.112.0/20 - allocated for nodePublic (zones 0, 1, … , 6; 4K IPs each) (7 * 4K IPs)
  - 172.16.128.0/17 - reserved for private subnets (32K IPs)
    - 172.16.128.0/20 - reserved for private master and other subnets (4K IPs)
      - 172.16.128.0/23 - reserved and not used by Kublr (512 IPs)
      - 172.16.130.0/23, … , 172.16.142.0/23 - allocated for masterPrivate (zones 0, 1, … , 6; 512 IPs each) (7 * 512 IPs)
    - 172.16.144.0/20, … , 172.16.240.0/20 - allocated for nodePrivate (zones 0, 1, … , 6; 4K IPs each) (7 * 4K IPs)

Starting with Kublr 1.19 it is possible to include additional CloudFormation resources into the cluster deployment via the Kublr cluster specification document.

This is done via the spec.locations[*].aws.cloudFormationExtras.resources property (Kublr 1.24+) or the spec.locations[*].aws.resourcesCloudFormationExtras property (pre-Kublr 1.24); both are of map type.

Deprecation notice: In Kublr 1.24 the spec.locations[*].aws.resourcesCloudFormationExtras property is deprecated in favor of spec.locations[*].aws.cloudFormationExtras.resources. The deprecated resourcesCloudFormationExtras property can still be used, but its content will automatically be migrated into the new spec.locations[*].aws.cloudFormationExtras.resources property on cluster update.

Any objects placed in this property are included “as is” into the resulting CloudFormation template generated by Kublr for the cluster.

The following example shows including an additional security group resource:

```yaml
spec:
  locations:
    - name: aws1
      aws:
        # a custom security group included in the cluster spec as an extra resource
        cloudFormationExtras:
          resources:
            SgMyCustomSecurityGroup:
              Type: AWS::EC2::SecurityGroup
              Properties:
                GroupDescription: { Ref: 'AWS::StackName' }
                VpcId: { Ref: NewVpc }
                Tags:
                  - Key: Name
                    Value: { 'Fn::Sub': '${AWS::StackName}-sg-my-custom-security-group' }
                SecurityGroupEgress:
                  - CidrIp: 0.0.0.0/0
                    IpProtocol: -1
                    FromPort: 0
                    ToPort: 65535
                SecurityGroupIngress:
                  - CidrIp: 0.0.0.0/0
                    IpProtocol: -1
                    FromPort: 0
                    ToPort: 65535
```

A number of AWS resources generated by Kublr by default can be customized using the default properties override mechanism. Override properties are included in the Kublr cluster specification in the same format that the AWS CloudFormation template specification expects, and Kublr includes the overrides into the CloudFormation template “as is”.

A Kublr support article lists all resources that can be overridden.

By default Kublr sets a number of standard AWS tags on the following AWS resource types that support tagging:

- AWS::AutoScaling::AutoScalingGroup
- AWS::EC2::DHCPOptions
- AWS::EC2::EIP (starting with Kublr 1.24.0)
- AWS::EC2::InternetGateway
- AWS::EC2::NatGateway
- AWS::EC2::RouteTable
- AWS::EC2::SecurityGroup
- AWS::EC2::Subnet
- AWS::EC2::Volume
- AWS::EC2::VPC
- AWS::EC2::VPCEndpoint
- AWS::ElasticLoadBalancing::LoadBalancer
- AWS::ElasticLoadBalancingV2::LoadBalancer
- AWS::ElasticLoadBalancingV2::TargetGroup
- AWS::IAM::Role
- AWS::Logs::LogGroup
- AWS::S3::Bucket

The following standard tags are set:

| Tag | Scope | Value |
|---|---|---|
| Name | all resources | <cluster-name>-<location-name>-<resource-specific-name> |
| KubernetesCluster | all resources | <cluster-name> |
| kubernetes.io/cluster/<cluster-name> | all resources | owned |
| kublr.io/node-group | instance group | <group-name> |
| kublr.io/node-ordinal | stateful instance group | <instance-number> |
| k8s.io/cluster-autoscaler/enabled | autoscaled instance group | yes |
| kublr.io/eip | stateful instance group with EIP enabled | <ip-address> |
| k8s.io/role/master | master instance group | 1 |
| k8s.io/role/node | worker instance group | 1 |
| kubernetes.io/role/elb | subnet | 1 |
| kubernetes.io/role/internal-elb | subnet | 1 |

Additional tags to be added to all AWS resources may be specified via the spec.locations[*].aws.cloudFormationExtras.tags property (available starting with Kublr 1.24.0).

Tags can also be added to or overridden in specific AWS resources via the resource properties override mechanism described in the previous section:

```yaml
spec:
  locations:
    - name: aws1
      aws:
        cloudFormationExtras:
          # these tags will be added to all AWS resources generated by Kublr for this cluster
          tags:
            - Key: custom-global-tag
              Value: custom-global-tag-value
          # this section specifies custom property and tag overrides for the VPC resource only
          vpc:
            Properties:
              Tags:
                - Key: custom-global-tag
                  Value: custom-global-tag-overridden-value
                - Key: custom-vpc-tag
                  Value: custom-vpc-tag-value
```
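The tag set the VPC is expected to end up with in this example can be modelled as a key-wise merge in which later sources win. This precedence (standard tags, then global extras tags, then resource-specific tags) is an assumption inferred from the example's "overridden" values, not a documented algorithm; the cluster name is hypothetical, and the Name tag is left out because its resource-specific part is not given here.

```python
def merge_tags(*tag_lists):
    """Merge CloudFormation tag lists; a later list wins when keys repeat (assumed precedence)."""
    merged = {}
    for tags in tag_lists:
        for tag in tags:
            merged[tag["Key"]] = tag["Value"]
    return [{"Key": key, "Value": value} for key, value in merged.items()]


cluster = "my-cluster"  # hypothetical cluster name
standard_tags = [
    {"Key": "KubernetesCluster", "Value": cluster},
    {"Key": f"kubernetes.io/cluster/{cluster}", "Value": "owned"},
]
global_tags = [{"Key": "custom-global-tag", "Value": "custom-global-tag-value"}]
vpc_tags = [
    {"Key": "custom-global-tag", "Value": "custom-global-tag-overridden-value"},
    {"Key": "custom-vpc-tag", "Value": "custom-vpc-tag-value"},
]
for tag in merge_tags(standard_tags, global_tags, vpc_tags):
    print(tag["Key"], "=", tag["Value"])
```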

To keep Kublr clusters scalable and responsive, all Kublr cluster instances are created using AWS Auto Scaling groups. A user can assign one of the following three group types to each instance group. Depending on the group type, different AWS resources are created for the instance group.

| Group type | AWS Resources |
|---|---|
| asg-lt | ASG with Launch Template |
| asg-mip | ASG with Launch Template and Mixed Instances Policy |
| asg-lc | ASG with Launch Configuration |

Launch Template is the default for most use cases, since it is what AWS recommends for ASG parameterization. A Mixed Instances Policy builds on a launch template and allows more flexibility in the types of instances that run in the group.

Before Kublr 1.19, group types were limited to AWS Launch Configuration; since Kublr 1.19, Launch Template is used by default.

To override properties of these Kublr-generated resources, add the overriding values to the appropriate extras field in the cluster specification.

One extras field applies to instance groups of any type, and three are tied to a specific group type.

| Resource | Override field |
|---|---|
| ASG | spec.nodes[*].locations[*].aws.asgCloudFormationExtras |
| Mixed Instance Policy | spec.nodes[*].locations[*].aws.mixedInstancesPolicyCloudFormationExtras |
| Launch Template | spec.nodes[*].locations[*].aws.launchTemplateDataCloudFormationExtras |
| Launch Configuration | spec.nodes[*].locations[*].aws.launchConfigurationPropertiesCloudFormationExtras |

Starting with Kublr 1.24, these fields are deprecated in favor of a more consistent structure:

| Resource | Override field in Kublr 1.24 |
|---|---|
| ASG | spec.nodes[*].locations[*].aws.cloudFormationExtras.autoScalingGroup |
| Mixed Instance Policy | spec.nodes[*].locations[*].aws.cloudFormationExtras.autoScalingGroup.Properties.MixedInstancesPolicy |
| Launch Template | spec.nodes[*].locations[*].aws.cloudFormationExtras.launchTemplate |
| Launch Configuration | spec.nodes[*].locations[*].aws.cloudFormationExtras.launchConfiguration |

The deprecated properties can still be used, but overrides specified there will be automatically moved into the new properties of the cluster spec on cluster update.

Some things to note:

- extras are applied according to the group type set in spec.nodes[*].locations[*].aws.groupType; extras specified in the cluster specification for incompatible resource types are ignored;
- launchTemplateDataCloudFormationExtras may be used together with mixedInstancesPolicyCloudFormationExtras in groups of type asg-mip;
- launchTemplateDataCloudFormationExtras is mutually exclusive with launchConfigurationPropertiesCloudFormationExtras; for each specific node group either one or the other is used.

Some examples of using this mechanism are described in Kublr support articles.
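The compatibility rules above can be captured as a lookup table. The mapping below is inferred from the group type and override field tables (using the pre-1.24 field names); the dict and function names are hypothetical and the sketch only illustrates which extras would apply and which would be ignored for a given group.

```python
# Pre-1.24 extras fields that apply to each group type, inferred from the tables above.
APPLICABLE_EXTRAS = {
    "asg-lt": {"asgCloudFormationExtras", "launchTemplateDataCloudFormationExtras"},
    "asg-mip": {
        "asgCloudFormationExtras",
        "launchTemplateDataCloudFormationExtras",
        "mixedInstancesPolicyCloudFormationExtras",
    },
    "asg-lc": {"asgCloudFormationExtras", "launchConfigurationPropertiesCloudFormationExtras"},
}


def split_extras(group_type, specified):
    """Return (applied, ignored) extras field names for one node group."""
    applicable = APPLICABLE_EXTRAS[group_type]
    applied = sorted(name for name in specified if name in applicable)
    ignored = sorted(name for name in specified if name not in applicable)
    return applied, ignored


print(split_extras("asg-lc", ["asgCloudFormationExtras", "launchTemplateDataCloudFormationExtras"]))
# (['asgCloudFormationExtras'], ['launchTemplateDataCloudFormationExtras'])
```

The mutual exclusivity of launch template and launch configuration extras follows directly from the table: no group type accepts both.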

It is possible to configure a Kublr cluster so that it uses pre-existing subnets instead of creating subnets automatically.

This is done via the instance groups’ property locations[*].aws.subnetIds.

By default this property is omitted, and subnets for Kublr instances are created as described in the section Auto-generated subnets above.

Non-default subnet allocation may be specified for each instance group (both masters and workers) by setting some of the elements of the subnetIds array.

Elements of the array correspond to the availability zones in this group’s availabilityZones array, so if a subnet is specified in a certain position of the subnetIds array, this subnet MUST be in the same AZ as the AZ specified in the same position of the group’s availabilityZones array.

An empty or undefined value in a position of the subnetIds array means that Kublr will use the auto-generated subnet for this group’s instances in the corresponding availability zone.

For example, if availabilityZones is ['us-east-1a', 'us-east-1c', 'us-east-1d'] and subnetIds is ['subnet1', '', { Ref: CustomSubnet3 } ], then Kublr will assume that subnet1 exists in AZ us-east-1a and { Ref: CustomSubnet3 } exists in us-east-1d, and the group’s instances starting in us-east-1c will run in a subnet generated by Kublr.

Note also that if a subnet ID is specified in a certain position of the subnetIds array, the correct AZ in which this subnet is located MUST also be specified in the corresponding position of the availabilityZones array.

subnetIds values may be string IDs of specific subnets, or objects allowed in CloudFormation stack templates, such as { Ref: MySubnet }.
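The positional pairing of subnetIds with availabilityZones can be sketched in Python using the example values above. The function name and the "auto-generated" label are hypothetical; CloudFormation objects such as { Ref: CustomSubnet3 } are represented as dicts, and the sketch does not verify that a subnet really lies in its AZ, which remains the user's responsibility.

```python
def subnet_plan(availability_zones, subnet_ids=None):
    """Map each AZ of an instance group to its subnet: user-provided or auto-generated."""
    subnet_ids = subnet_ids or []
    plan = {}
    for position, zone in enumerate(availability_zones):
        subnet = subnet_ids[position] if position < len(subnet_ids) else None
        # '' or a missing element means Kublr uses its auto-generated subnet for that AZ.
        plan[zone] = subnet if subnet else "auto-generated"
    return plan


plan = subnet_plan(
    ["us-east-1a", "us-east-1c", "us-east-1d"],
    ["subnet1", "", {"Ref": "CustomSubnet3"}],
)
print(plan)
# {'us-east-1a': 'subnet1', 'us-east-1c': 'auto-generated', 'us-east-1d': {'Ref': 'CustomSubnet3'}}
```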

Kublr allows creating clusters in fully private VPCs that completely block access to and from the public internet: all subnets in the cluster are private, no AWS Internet Gateways or NAT Gateways are created in the cluster, and no public internet routes are created.

Note that for Kublr to be able to provision a Kubernetes cluster in this configuration, it is the user’s responsibility to ensure network connectivity between the private cluster and the Kublr Control Plane provisioning the cluster, as well as the cluster instances’ access to an image and a binary repository hosting Kublr and Kubernetes images and binaries (by default the public repositories are used).

Complete isolation from the public internet may be achieved by using the following properties in the Kublr AWS location object:

spec.locations[*].aws.skipInternetGateway - true/false, undefined (same as false) by default

If true, Kublr skips automatic creation of an AWS Internet Gateway for the VPC.

spec.locations[*].aws.natMode

NAT mode can be legacy, multi-zone, or none (default: multi-zone for new clusters, legacy for pre-existing ones):

- legacy mode is supported for compatibility with AWS clusters created by pre-1.19 Kublr releases;
- multi-zone mode is the default for all new clusters;
- none mode is used to avoid automatic creation of NAT gateways.

Migration from legacy to multi-zone is possible, but it may affect the cluster's public egress addresses, requires manual operation, and cannot be easily rolled back.

With legacy NAT mode, only one NAT gateway is created, in one of the availability zones, which is not AZ fault tolerant. The public subnet used for the NAT gateway in legacy mode can change depending on the configuration of master and worker node groups, which may prevent the CloudFormation stack from updating in some situations.

With multi-zone NAT mode, by default a NAT gateway is created for each AZ in which private node groups are present.

It is also possible to create NAT gateways only in some AZs, and to specify which NAT gateways should be used by which specific private subnets.

NAT gateways created in multi-zone mode also do not cause issues with any configuration changes in the cluster, and thus never prevent CloudFormation stacks from updating.
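The default NAT gateway placement for each mode can be sketched as follows. This is a simplified, hypothetical model: the per-AZ customization mentioned above is not modelled, returning no gateways when there are no private groups is an assumption for legacy mode, and the AZ chosen in legacy mode is arbitrary here because the real choice depends on the node group configuration.

```python
def nat_gateway_azs(nat_mode, private_group_azs):
    """AZs that get a NAT gateway by default for the given natMode (sketch)."""
    azs = sorted(set(private_group_azs))
    if nat_mode == "none" or not azs:
        return []
    if nat_mode == "multi-zone":
        # one NAT gateway per AZ that contains private node groups
        return azs
    if nat_mode == "legacy":
        # a single NAT gateway; picking the first AZ is arbitrary in this sketch
        return azs[:1]
    raise ValueError(f"unknown natMode: {nat_mode}")


private_azs = ["us-east-1b", "us-east-1a", "us-east-1b"]
print(nat_gateway_azs("multi-zone", private_azs))  # ['us-east-1a', 'us-east-1b']
print(nat_gateway_azs("legacy", private_azs))  # ['us-east-1a']
```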

spec.locations[*].aws.skipPublicSubnetsForPrivateGroups - true/false, undefined (same as false) by default

If true, Kublr skips creating empty public subnets for private node groups.

By default Kublr creates an empty public subnet for each AZ in which there is at least one private node group. CIDRs for such public subnets are taken from the cidrBlocks.otherPublic property.

These public subnets are necessary for public ELBs/NLBs created by Kubernetes for Services of type LoadBalancer to be able to connect to worker nodes running in private subnets in the corresponding AZs.

Note that even if skipPublicSubnetsForPrivateGroups is true, public subnets may still be created for NAT gateways for private master and/or worker groups.

Public master subnets will also be created for private master groups if masterElbAllocationPolicy or masterNlbAllocationPolicy requires a public load balancer.

It is only possible to fully disable public subnet creation in clusters with:

masterElbAllocationPolicy and masterNlbAllocationPolicy values that do not require public load

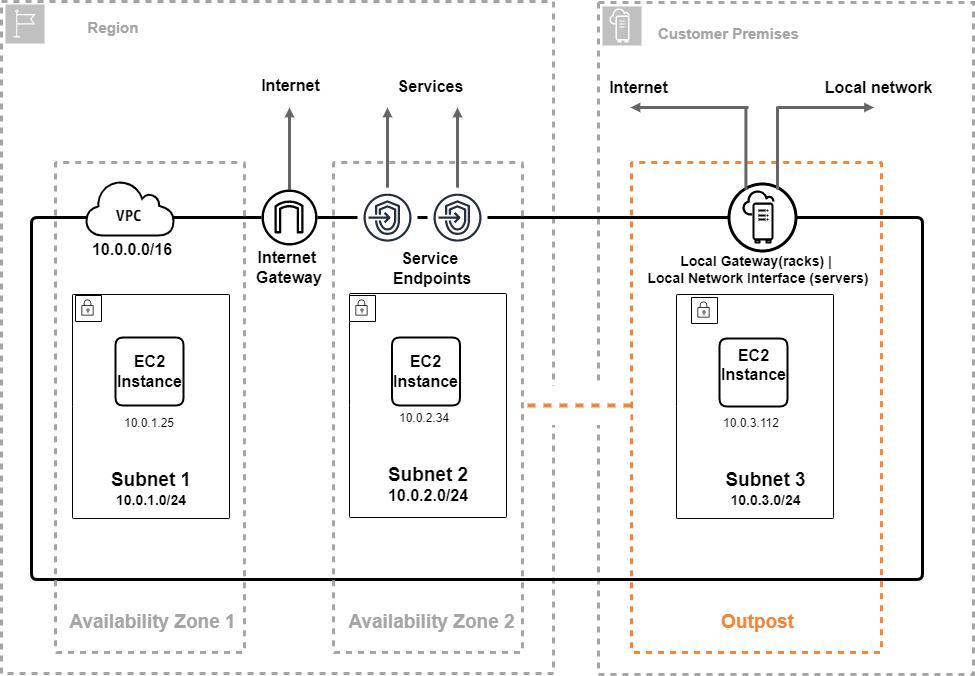

balancer creation (none, private, or auto in single-master cluster)natMode set to noneskipPublicSubnetsForPrivateGroups set to trueAWS Outposts is a fully managed service that extends AWS infrastructure, APIs, and tools to customer premises. By providing local access to AWS managed infrastructure, AWS Outposts enables customers to build and run applications on premises using the same programming interfaces as in AWS Regions, while using local compute and storage resources for lower latency and local data processing needs.

AWS Outposts extends an Amazon VPC from an AWS Region to an Outpost with the VPC components that are accessible in the Region, including internet gateways, virtual private gateways, Amazon VPC Transit Gateways, and VPC endpoints.

An Outpost is homed to an Availability Zone in the Region and is an extension of that Availability Zone that you can use for resiliency.

The following diagram shows the network components for your Outpost.

From the Kublr standpoint, only two types of resources need to be configured differently to be created in the Outpost rather than in the cloud region: subnets and EBS volumes.

To make sure that a Kublr cluster is created completely in the Outpost, ensure that the cluster only uses the availability zone to which the Outpost is homed, and specify the Outpost ARN in the spec.locations[*].aws.outpostArn parameter of the cluster spec as follows:

```yaml
spec:
  ...
  locations:
    - aws:
        region: us-west-2
        availabilityZones:
          - us-west-2b
        outpostArn: arn:aws:outposts:us-west-2:123456789012:outpost/op-17c8c22ad3844dee9
        ...
```

The Outpost ARN specified in spec.locations[*].aws.outpostArn is automatically propagated to all subnets and EBS volumes created by Kublr; as a result, all cluster nodes run in the Outpost.

In more complex cases, e.g. when different instance groups need to run in different Outposts, or some in Outposts and some in cloud regions, the Outpost ARN may be specified on a per-resource basis using the Kublr CloudFormation resource override capabilities described in the section Overriding AWS resources generated by Kublr.

The following cluster specification snippet shows an example of such configuration:

```yaml
spec:
  locations:
    - aws:
        ...
        region: us-west-2
        availabilityZones:
          - us-west-2b
        # Use existing VPC
        vpcId: vpc-0d65fa68ccc7921d2
        vpcCidrBlock: '10.0.0.0/16'
        cloudFormationExtras:
          # add outpost ARN to Properties.OutpostArn property of every subnet created by Kublr
          subnet:
            Properties:
              OutpostArn: arn:aws:outposts:us-west-2:123456789012:outpost/op-17c8c22ad3844dee9
  ...
  master:
    locations:
      - aws:
          availabilityZones:
            - us-west-2b
          # create K8S master nodes in an existing subnet
          subnetIds:
            - 'subnet-0b0aff651dd7f0fb7'
          cloudFormationExtras:
            # add outpost ARN to Properties.OutpostArn property of every master data EBS volume
            masterEBS:
              Properties:
                OutpostArn: arn:aws:outposts:us-west-2:123456789012:outpost/op-17c8c22ad3844dee9
  ...
```