When deploying clusters into different clouds with Kublr, direct access to the cluster’s virtual machines via SSH is prohibited by default. Access is restricted using firewall rules or security groups.

When the Kubernetes API is available, you can access master nodes for troubleshooting with tools such as node-shell:

kubectl node-shell <YOUR_MASTER_NODE_NAME>

If the Kubernetes API does not respond, however, you may need SSH access to the master nodes to troubleshoot. This article describes how to get that access on different cloud platforms.

IMPORTANT! If the Kubernetes API is available, it is strongly recommended not to use the less secure SSH connection.
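The choice between the two access paths can be sketched as a small shell helper. This is only an illustration: the function name choose_access is hypothetical, and probing the API with `kubectl get --raw /readyz` is an assumption about what counts as "available", not something the article prescribes.

```shell
# Hypothetical helper: run a probe command and report which access path to use.
# All arguments form the probe command; its output is discarded.
choose_access() {
  if "$@" >/dev/null 2>&1; then
    echo "node-shell"   # API answered: prefer kubectl node-shell
  else
    echo "ssh"          # API did not answer: fall back to SSH
  fi
}
```

For example, `choose_access kubectl get --raw /readyz --request-timeout=5s` prints node-shell when the API server answers within five seconds and ssh otherwise.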

To allow SSH access to your master node in AWS:

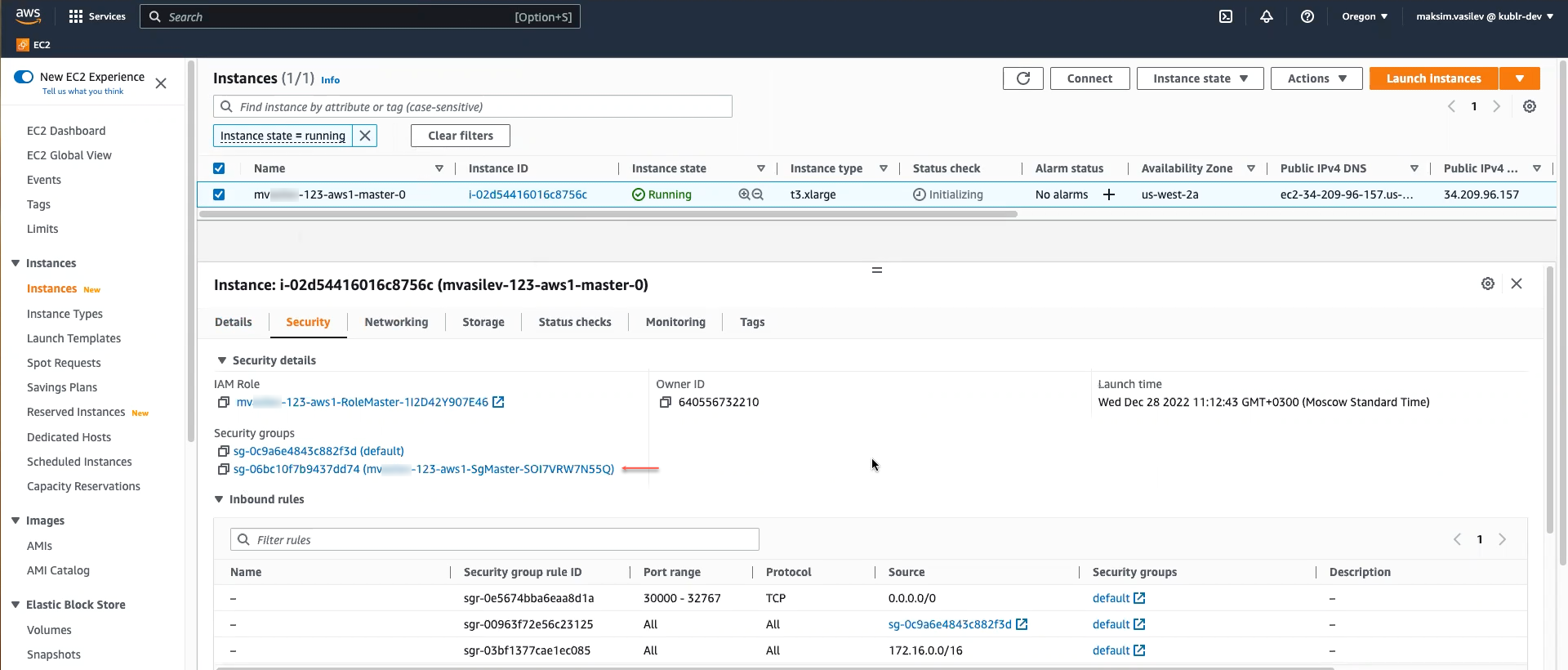

Open the AWS EC2 console, go to Instances, and locate your master node instance.

Go to the Security section. Open the security group of your node.

For the security group, add an SSH inbound rule: Edit inbound rules → Add rule → select the SSH type. Specify the source IP range (“access from”) that you need; 0.0.0.0/0 stands for “all IPs”.

Save the changes and wait until the node is initialized.

Test your SSH connection to the master node via its public IPv4 address.

IMPORTANT! It is strongly recommended to deny SSH access after troubleshooting is done. This may be done by deleting the created inbound rule for SSH.
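The same rule can also be added and removed with the AWS CLI instead of the console. The sketch below only prints the command so that it can be reviewed before it is run. The function name ssh_rule_cmd is hypothetical, and the security group ID and CIDR used in the usage note are placeholders.

```shell
# Hypothetical helper: print the AWS CLI command that opens or closes
# inbound SSH (TCP port 22) on a security group for a given source CIDR.
ssh_rule_cmd() {
  action="$1"   # "open" or "close"
  sg_id="$2"    # security group ID of the master node
  cidr="$3"     # source range allowed to connect
  case "$action" in
    open)  subcommand="authorize-security-group-ingress" ;;
    close) subcommand="revoke-security-group-ingress" ;;
    *)     echo "usage: ssh_rule_cmd open|close <sg-id> <cidr>" >&2; return 1 ;;
  esac
  printf 'aws ec2 %s --group-id %s --protocol tcp --port 22 --cidr %s\n' \
    "$subcommand" "$sg_id" "$cidr"
}
```

For example, `ssh_rule_cmd open sg-0123456789abcdef0 203.0.113.7/32` prints the command that adds the rule for a single address, and the same call with close prints the command that removes it once troubleshooting is done.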

Starting from Kublr release 1.25.0, to access a master node of your GCP cluster:

Consider that using the specification:

root as username or its part.

Here is an example for the SSH public key credentials named e-SSH-credentials, with the SSH username changed from the default to user-001:

kind: Cluster
metadata:
  name: cluster-1676547473
  ownerReferences: ...
  space: space-001
spec:
  ...
  locations:
  master:
    locations:
    - gcp:
        sshKeySecretRef: e-SSH-credentials
        sshUsername: user-001
  ...

Connect via console like:

ssh <YOUR-SSH-USER>@<MASTER-NODE-PRIMARY-IP> -i ~/.ssh/<YOUR-PRIVATE-KEY-FILE>.rsa

In our example:

ssh user-001@34.28.142.90 -i ~/.ssh/e-SSH-credentials.rsa

It is possible to enable SSH access to the MS Azure master nodes via a property in the cluster specification as follows:

spec:
  locations:
  - azure:
      enableMasterSSH: true

With master SSH enabled, Kublr makes the following changes in the cluster:

Security rules are created on the network security group that allow access to port 22 on VMs in the master application security group.

For a master group of type VirtualMachine, AvailabilitySet, or AvailabilitySetLegacy, inbound NAT rules are created on the public load balancer for each master, with port 2200 for the first master, 2201 for the second one, etc.

For a master group of type VirtualMachineScaleSet, inbound NAT pools are created on the public load balancer for each master VMSS, with the port range 2300-2301 for the first master, 2302-2303 for the second one, etc.

The enableMasterSSH property may be changed on a running cluster, and the change does not result in cluster downtime.

After SSH access is enabled, use the ssh command to connect to the master node:

ssh -i <ssh-pk-file> -p <public-ssh-port> <ssh-username>@<public-loadbalancer-ip>

Here:

ssh-pk-file - the SSH private key file configured for this cluster via the properties spec.locations[*].azure.azureSshKeySecretRef, spec.master.locations[*].azure.sshKeySecretRef, spec.nodes[*].locations[*].azure.sshKeySecretRef, and possibly the deprecated properties spec.master.locations[*].azure.sshKey and spec.nodes[*].locations[*].azure.sshKey.

public-ssh-port - 2200, 2201, … or 2300, 2301, 2302, … depending on the master group type and the master number as described above.

ssh-username - the SSH username configured by Kublr; by default it is the same as the cluster name, and it can be overridden for each node group via the properties spec.master.locations[*].azure.sshUsername and spec.nodes[*].locations[*].azure.sshUsername.

public-loadbalancer-ip - public IP of the cluster’s public load balancer.
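The port numbering described above can be worked out with a small helper. This is a sketch only: the function name azure_master_ssh_port is hypothetical, the master index is zero-based (0 for the first master), and it assumes that the "etc." in the description continues the same linear pattern for further masters.

```shell
# Hypothetical helper: print the public SSH port (or port range) for a master,
# given the master group type and the zero-based master index.
azure_master_ssh_port() {
  group_type="$1"
  index="$2"
  case "$group_type" in
    VirtualMachine|AvailabilitySet|AvailabilitySetLegacy)
      # One NAT rule per master: 2200, 2201, ...
      echo $((2200 + index))
      ;;
    VirtualMachineScaleSet)
      # One NAT pool of two ports per master VMSS: 2300-2301, 2302-2303, ...
      start=$((2300 + 2 * index))
      echo "${start}-$((start + 1))"
      ;;
    *)
      echo "unknown master group type: $group_type" >&2
      return 1
      ;;
  esac
}
```

For example, `azure_master_ssh_port AvailabilitySet 1` gives the port for the second master, which can then be passed to ssh with the -p option.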

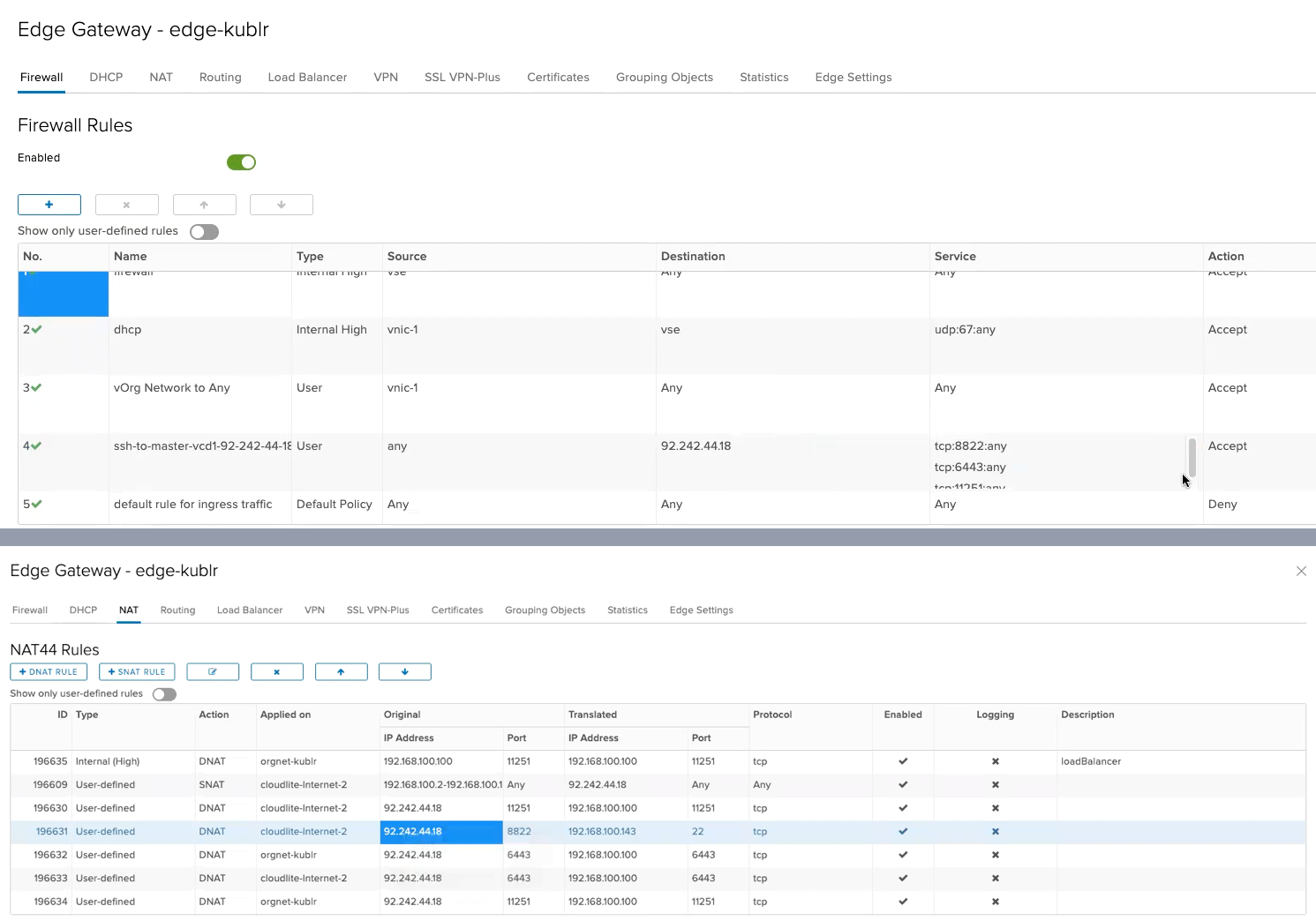

VMware Cloud Director (VCD) uses edge gateways to provide network access to its environments, including SSH access. Thus, to access a cluster deployed into VCD with Kublr, you need to connect to the IP address provided by the corresponding VCD edge gateway, as described in this section.

STEP 1. Edit specification

Include mastersExternalSSHStartPort in the cluster specification. For example:

spec:
  ...
  locations:
  - name: vcd1
    vcd:
      mastersExternalSSHStartPort: 8822

With mastersExternalSSHStartPort set, Kublr creates an SSH firewall rule and NAT (network address translation) rules on the edge gateway for your cluster master node(s). This allows you to connect to the edge gateway public IP, from which the connection is redirected to your master node IP.

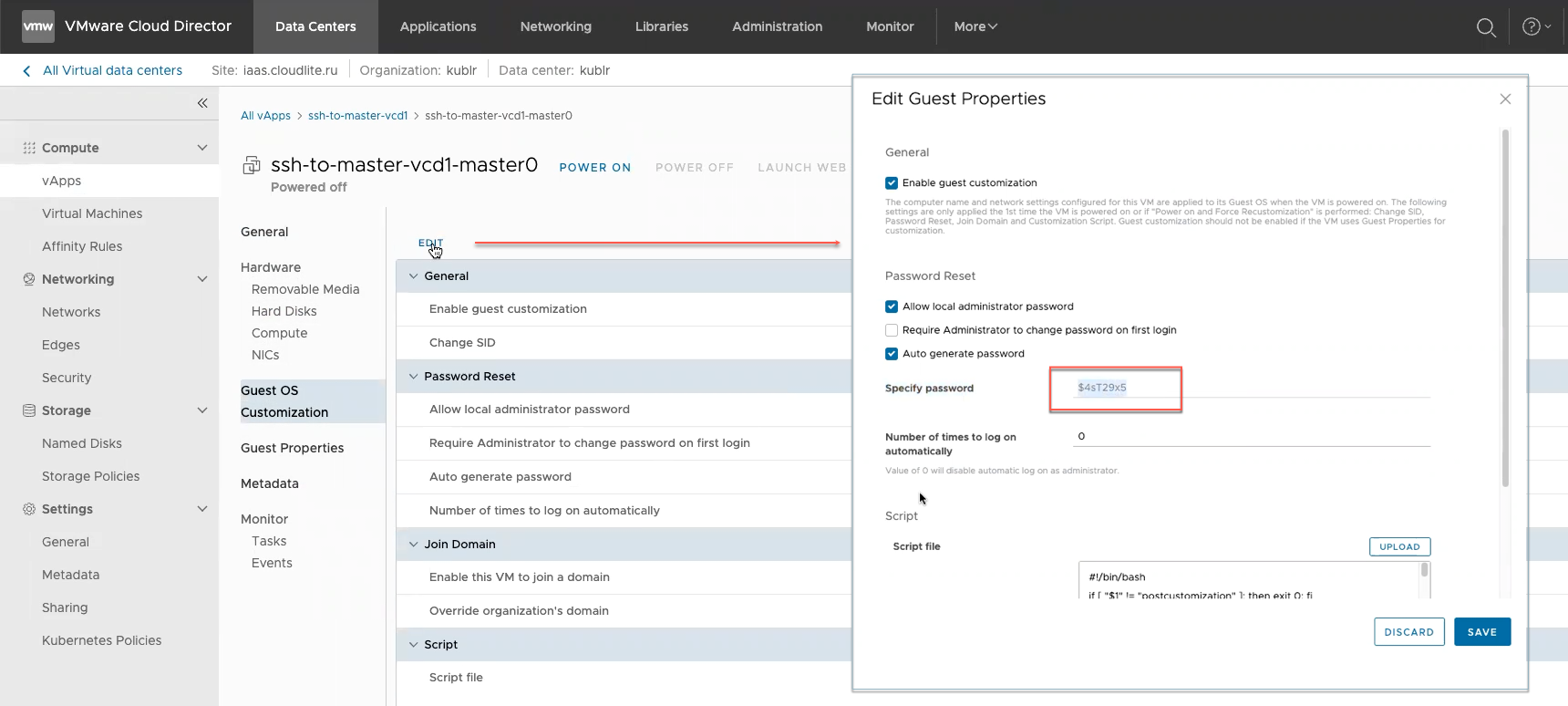

STEP 2. Obtain username and password

In the VMware Cloud Director console, go to your data center → Virtual Machines → your master node VM → Guest OS Customization → click Edit → copy the value from the Specify password field.

The default username is root.

STEP 3. Connect

Use:

ssh <USERNAME>@<EDGE-GATEWAY-IP> -p <PORT-FROM-SPECIFICATION>

In our example:

ssh root@92.242.44.18 -p 8822

When asked, type in the previously obtained password. You are connected to the master node.
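For clusters with several master nodes, the connection command can be built with a small helper. This is a sketch under an assumption that the article does not state: that, as the property name mastersExternalSSHStartPort suggests, the first master is reachable on the start port and each further master on the next port. The function name vcd_master_ssh_cmd is hypothetical; verify the actual ports in the NAT rules on your edge gateway.

```shell
# Hypothetical helper: print the ssh command for a master behind the VCD edge gateway.
# ASSUMPTION: master N (zero-based) is exposed on start port + N.
vcd_master_ssh_cmd() {
  user="$1"          # SSH username, root by default
  gateway_ip="$2"    # public IP of the edge gateway
  start_port="$3"    # value of mastersExternalSSHStartPort
  index="${4:-0}"    # zero-based master index, defaults to the first master
  echo "ssh ${user}@${gateway_ip} -p $((start_port + index))"
}
```

With the values from the example above, `vcd_master_ssh_cmd root 92.242.44.18 8822` prints the same command as shown for the first master.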